Its responses can be deceiving

Sam Altman, CEO of OpenAI, cautioned users in a startlingly direct tweet about ChatGPT, the “interactive, conversational model” built on the company’s GPT-3.5 text generator:

“ChatGPT is incredibly limited but good enough at some things to create a misleading impression of greatness”, he tweeted. “It’s a mistake to be relying on it for anything important right now. It’s a preview of progress; we have lots of work to do on robustness and truthfulness”.

While warning at launch that ChatGPT was a preliminary demo and research release with “a lot of limitations”, he also extolled its potential uses: “Soon you will be able to have helpful assistants that talk to you, answer questions, and give advice”, he tweeted. “Later, you can have something that goes off and does tasks for you. Eventually, you can have something that goes off and discovers new knowledge for you”.

However, according to this article, ChatGPT has a hidden flaw: it swiftly produces eloquent, self-assured responses that frequently sound credible and true even when they are not. Like other generative large language models, ChatGPT invents facts. Some people call this “hallucination” or “stochastic parroting”. It happens because these models are trained to predict the next word given an input, not to determine whether a statement is true.
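To make the idea of next-word prediction concrete, here is a minimal sketch in Python. It is a toy word-pair counter, not how ChatGPT is actually built: real large language models use neural networks over tokens and far more context. The corpus, the function names, and the example sentence are all hypothetical and chosen only for illustration. The point it shows is that a model of this kind completes a sentence with whatever followed most often in its training text, with no step that checks whether the result is true.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus: the false statement simply appears more often.
CORPUS = [
    "the moon is made of cheese",
    "the moon is made of cheese",
    "the moon is made of rock",
]


def train_bigrams(sentences):
    """Count, for each word, which words follow it in the corpus."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def complete(counts, prompt, max_words=5):
    """Greedily extend the prompt one most-likely word at a time."""
    words = prompt.split()
    for _ in range(max_words):
        next_word = predict_next(counts, words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)


model = train_bigrams(CORPUS)
print(complete(model, "the moon is made"))
```

In this toy setup the completion ends in "cheese" simply because that word followed "of" more often than "rock" did. Nothing in the code evaluates truth; frequency alone decides the answer, and the output reads just as fluently either way.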

Some people have remarked that ChatGPT stands out because it is so adept at making its hallucinations seem plausible. Benedict Evans, a technology analyst, for instance, requested that ChatGPT “write a bio for Benedict Evans”. He tweeted that the outcome was “plausible, but almost entirely untrue”.

More concerning is the fact that there are countless queries for which the user could only tell that the response was false if they already knew the answer. Arvind Narayanan, a professor of computer science at Princeton, made the following observation in a tweet: “People are excited about using ChatGPT for learning. It’s often very good. But the danger is that you can’t tell when it’s wrong unless you already know the answer. I tried some basic information security questions. In most cases, the answers sounded plausible but were in fact BS”.

Still, there is no evidence that demand for this tool is waning. In fact, OpenAI appears to be having difficulty keeping up with it. Some users report receiving messages that read: “Whoa there! You might have to wait a bit. Currently, we are receiving more requests than we are comfortable with”.

The discourse surrounding ChatGPT has been frenetic, ranging from criticism of Google for allegedly lagging behind in the development of LLMs (large language models) to worries about the future of college essays. Despite all this, those building these models appear to be keeping their heads down, aware of the intense competition that lies ahead.

Google, for its part, seems not to have shown any ChatGPT-like functionality in its products because it would be too expensive, and because the results would not be as good as those of classic search, partly due to response latency.

From OpenAI’s perspective, it seems clear that the company is using this period of extensive community experimentation with ChatGPT to gather human feedback for RLHF (Reinforcement Learning from Human Feedback) ahead of the highly anticipated release of GPT-4. In other words, the more people use ChatGPT, the more feedback there is to train the model on.

Of course, Stability AI CEO Emad Mostaque, who makes that claim, also speaks for those who are racing to develop an open-source version of ChatGPT.

LAION, one of the developers of Stable Diffusion (one of the three best-known AI image generators, alongside DALL-E and Midjourney), says it is actively working on just that.

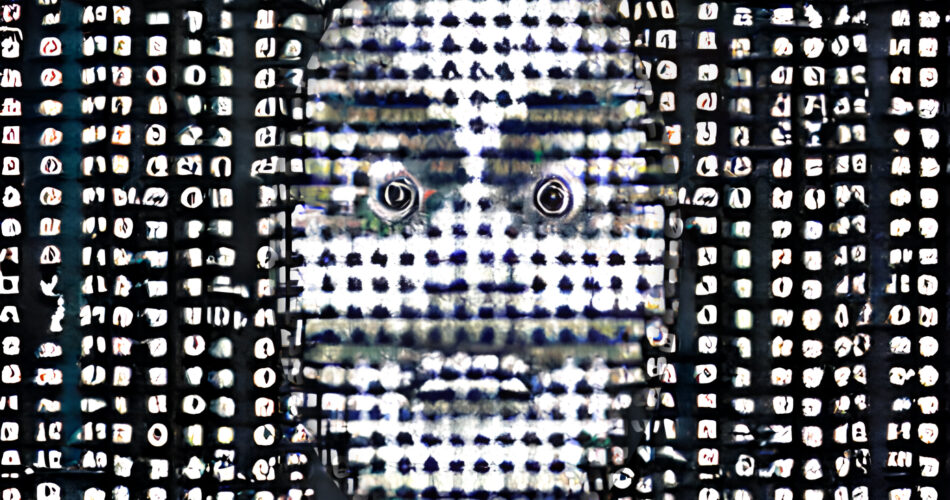

This new tool highlights one of the major problems of AI, namely deception. The deception need not be intentional on the part of the company that developed the tool; it arises from how the algorithm works. If a given sequence of words is statistically the most likely continuation of a request, the AI will produce it, because that is what it learned in training. The problem is that people will inevitably trust a tool that is comfortable, easy to use, and engaging. Without critical thinking, they will absorb false information. Imagine this chain reaction expanding globally: people will believe they know something that is in fact untrue, and their perception of the world will be altered.

Meanwhile, even in settings such as school, where people are corrected when they say something wrong, they could use this tool to pass a test whenever the AI happens to answer correctly.

This tool will keep improving and give increasingly better results, but people should learn not to accept an answer just because it is easy to get or because it comes from a source they like.