Technology around us is constantly evolving and compelling us to think about how we live and will live, how society will change and to what extent it will be affected. For the better or the worse? It is difficult to give a clear answer. However, even art forms such as cinema can give us food for thought on society and ourselves, as well as some psychological reasoning. All this to try to better understand ourselves, the world around us, and where we are headed.

The House blog tries to do all of that.

Latest posts

July 9, 2024From voice cloning to deepfakes

Artificial intelligence attacks can affect almost everyone, therefore, you should always be on the lookout for them. Using AI to target you is already a thing, according to a top security expert, who has issued a warning.

AI appears to be powering features, apps, and chatbots that mimic humans everywhere these days. Even if you do not employ those AI-powered tools, criminals may still target you based only on your phone number.

To scam you, for example, criminals can employ this technology to produce fake voices—even ones that sound just like loved ones.

“Many people still think of AI as a future threat, but real attacks are happening right now,” said security expert Paul Bischoff in an article from The Sun.

Phone clone

“I think deepfake audio in particular is going to be a challenge because we as humans can’t easily identify it as fake, and almost everybody has a phone number.”

In a matter of seconds, artificial intelligence voice-cloning can be done. Furthermore, it will get harder to distinguish between a real voice and an imitation.

It will be crucial to ignore unknown calls, use secure words to confirm the identity of callers, and be aware of telltale indicators of scams, such as urgent demands for information or money.

An AI researcher has warned of six enhancements that make deepfakes more “sophisticated” and dangerous than before and can trick your eyes. Naturally, there are other threats posed by AI besides “deepfake” voices.

Paul, a Comparitech consumer privacy advocate, issued a warning that hackers might exploit AI chatbots to steal your personal information or even deceive you.

“AI chatbots could be used for phishing to steal passwords, credit card numbers, Social Security numbers, and other private data,” he told The U.S. Sun.

“AI conceals the sources of information that it pulls from to generate responses.

AI romance scams

Beware of scammers that use AI chatbots to trick you… What you should know about the risks posed by AI romance scam bots, as reported by The U.S. Sun, is as follows:

Scammers take advantage of AI chatbots to scam online daters. These chatbots are disguised as real people and can be challenging to identify.

Some warning indicators, nevertheless, may help you spot them. For instance, it is probably not a genuine person if the chatbot answers too rapidly and generically. If the chatbot attempts to transfer the conversation from the dating app to another app or website, that is another red flag.

Furthermore, it is a scam if the chatbot requests money or personal information. When communicating with strangers on the internet, it is crucial to use caution and vigilance, particularly when discussing sensitive topics. It is typically true when something looks too wonderful to be true.

Anyone who appears overly idealistic or excessively eager to further the relationship should raise suspicions. By being aware of these indicators, you may protect yourself against becoming a victim of AI chatbot fraud.

“Responses might be inaccurate or biased, and the AI might pull from sources that are supposed to be confidential.”

AI everywhere

AI will soon become a necessary tool for internet users, which is a major concern. Tens of millions of people use chatbots that are powered by it already, and that number is only going to rise.

Additionally, it will appear in a growing variety of products and apps. For example, Microsoft Copilot and Google’s Gemini are already present in products and devices, while Apple Intelligence—working with ChatGPT from OpenAI—will soon power the iPhone. Therefore, the general public must understand how to use AI safely.

“AI will be gradually (or abruptly) rolled into existing chatbots, search engines, and other technologies,” Paul explained.

“AI is already included by default in Google Search and Windows 11, and defaults matter.

“Even if we have the option to turn AI off, most people won’t.”

Deepfakes

Sean Keach, Head of Technology and Science at The Sun and The U.S. Sun, explained that one of the most concerning developments in online security is the emergence of deepfakes.

Almost nobody is safe because deepfake technology can make videos of you even from a single photo. The sudden increase of deepfakes has certain benefits, even though it all seems very hopeless.

To begin with, people are now far more aware of deepfakes. People will therefore be on the lookout for clues that a video may be fake. Tech companies are also investing time and resources in developing tools that can identify fraudulent artificial intelligence material.

This implies that fake content will be flagged by social media to you more frequently and with more confidence. You will probably find it more difficult to identify visual mistakes as deepfakes become more sophisticated, especially in a few years.

Hence, using common sense to be skeptical of everything you view online is your best line of defense. Ask as to whether it makes sense for someone to have created the video and who benefits from you watching it.You may be watching a fake video if someone is acting strangely, or if you’re being rushed into an action.

As AI technology continues to advance and integrate into our daily lives, the landscape of cyber threats evolves with it. While AI offers numerous benefits, it also presents new challenges for online security and personal privacy. The key to navigating this new terrain lies in awareness, education, and vigilance.

Users must stay informed about the latest AI-powered threats, such as voice cloning and deepfakes, and develop critical thinking skills to question the authenticity of digital content. It’s crucial to adopt best practices for online safety, including using strong passwords, being cautious with personal information, and verifying the identity of contacts through secure means.

Tech companies and cybersecurity experts are working to develop better detection tools and safeguards against AI-driven scams. However, the responsibility ultimately falls on individuals to remain skeptical and alert in their online interactions. [...]

July 2, 2024Expert exposes evil plan that allows chatbots to trick you with a basic exchange of messages

Cybercriminals may “manipulate” artificial intelligence chatbots to deceive you. A renowned security expert has issued a strong warning, stating that you should use caution when conversing with chatbots.

In particular, if at all possible, avoid providing online chatbots with any personal information. Tens of millions of people use chatbots like Microsoft’s Copilot, Google’s Gemini, and OpenAI’s ChatGPT. And there are thousands of versions that, by having human-like conversations, can all make your life better.

However, as cybersecurity expert Simon Newman clarified in this article, chatbots also pose a hidden risk.

“The technology used in chatbots is improving rapidly,” said Simon, an International Cyber Expo Advisory Council Member and the CEO of the Cyber Resilience Centre for London.

“But as we have seen, they can sometimes be manipulated to give false information.”

“And they can often be very convincing in the answers they give!”

Deception

People who are not tech-savvy may find artificial intelligence chatbots confusing, so much so that even for computer whizzes, it is easy to forget that you are conversing with a robot. Simon added that this can result in difficult situations.

“Many companies, including most banks, are replacing human contact centers with online chatbots that have the potential to improve the customer experience while being a big money saver,” Simon explained.

“But, these bots lack emotional intelligence, which means they can answer in ways that may be insensitive and sometimes rude.”

Not to mention the fact that they cannot solve all those problems, which represent an exception that is difficult for a bot to handle and can therefore leave the user excluded from solving that problem without anyone taking responsibility.

“This is a particular challenge for people suffering from mental ill-health, let alone the older generation who are used to speaking to a person on the other end of a phone line.”

Chatbots, for example, have already “mastered deception.” They can even pick up the skill of “cheating us” without being asked.

Chatbots

The real risk, though, comes when hackers manage to convince the AI to target you rather than a chatbot misspeaking. A hacker could be able to access the chatbot itself or persuade you into downloading an AI that has been compromised and is intended for harm. After that, this chatbot can begin to extract your personal information for the benefit of the criminal.

“As with any online service, it’s important for people to take care about what information they provide to a chatbot,” Simon warned.

What you should know about the risks posed by AI romance scam bots, as reported by The U.S. Sun, is that people who are looking for love online may be conned by AI chatbots. These chatbots might be hard to identify since they are made to sound like real people.

Some warning indicators, nevertheless, can help you spot them. For instance, it is probably not a genuine person if the chatbot answers too rapidly and generically. If the chatbot attempts to move the conversation from the dating app to another app or website, that is another red flag. Furthermore, the chatbot is undoubtedly fake if it requests money or personal information.

When communicating with strangers on the internet, it is crucial to exercise caution and vigilance, particularly when discussing sensitive topics, especially when something looks too wonderful to be true. Anyone who appears overly idealistic or excessively eager to further the relationship should raise suspicions. By being aware of these indicators, you can guard against becoming a victim of AI chatbot fraud.

“They are not immune to being hacked by cybercriminals.”

“And potentially, it can be programmed to encourage users to share sensitive personal information, which can then be used to commit fraud.”

We should embrace a “new way of life” in which we verify everything we see online twice, if not three times, said a security expert. According to recent research, OpenAI’s GPT-4 model passed the Turing test, demonstrating that people could not consistently tell it apart from a real person.

People need to learn not to blindly trust when it comes to revealing sensitive information through a communication medium, as the certainty of who is on the other side is increasingly less obvious. However, we must also keep in mind those cases where others can impersonate us without our knowledge. In this case, it is much more complex to realize it, which is why additional tools are necessary to help us verify identity when sensitive operations are required. [...]

June 25, 2024How AI is reshaping work dynamics

Artificial intelligence developments are having a wide range of effects on workplaces. AI is changing the labor market in several ways, including the kinds of work individuals undertake and their surroundings’ safety.

As reported here, technology such as AI-powered machine vision can enhance workplace safety through early risk identification, such as unauthorized personnel access or improper equipment use. These technologies can also enhance task design, training, and hiring. However, their employment requires serious consideration of employee privacy and agency, particularly in remote work environments where home surveillance becomes an issue.

Companies must uphold transparency and precise guidelines on the gathering and use of data to strike a balance between improving safety and protecting individual rights. These technologies have the potential to produce a win-win environment with higher production and safety when used carefully.

The evolution of job roles

Historically, technology has transformed employment rather than eliminated it. Word processors, for example, transformed secretaries into personal assistants, and AI in radiology complements radiologists rather than replaces them. Complete automation is less likely to apply to jobs requiring specialized training, delicate judgment, or quick decision-making. But as AI becomes more sophisticated, some humans may end up as “meat puppets,” performing hard labor under the guidance of AI. This goes against the romantic notion that AI will free us up to engage in creative activity.

Due to Big Tech’s early embrace of AI, the sector has consolidated, and new business models have emerged as a result of its competitive advantage. AI is rapidly being used by humans as a conduit in a variety of industries. For example, call center personnel now follow scripts created by machines, and salesmen can get real-time advice from AI.

While emotionally and physically demanding jobs like nursing are thought to be irreplaceable in the healthcare industry, AI “copilots” could take on duties like documentation and diagnosis, freeing up human brain resources for non-essential tasks.

Cyborgs vs. centaurs

There are two different frameworks for human-AI collaboration described by the Cyborg and Centaur models, each with pros and cons of their own. According to the Cyborg model, AI becomes an extension of the person and is effortlessly incorporated into the human body or process, much like a cochlear implant or prosthetic limb. The line between a human and a machine is blurred by this deep integration, occasionally even questioning what it means to be human.

In contrast, the Centaur model prioritizes a cooperative alliance between humans and AI, frequently surpassing both AI and human competitors. By augmenting the machine’s capabilities with human insight, this model upholds the values of human intelligence and produces something greater than the sum of its parts. In this configuration, the AI concentrates on computing, data analysis, or regular activities while the human stays involved, making strategic judgments and offering emotional or creative input. In this case, both sides stay separate, and their cooperation is well-defined. Nevertheless, this dynamic has changed due to the quick development of chess AI, which has resulted in systems like AlphaZero. These days, AI is so good at chess that adding human strategy may negatively impact the AI’s performance.

The Centaur model encourages AI and people to work together in a collaborative partnership in the workplace, with each bringing unique capabilities to the table to accomplish shared goals. For example, in data analysis, AI could sift through massive databases to find patterns, while human analysts would use contextual knowledge to choose the best decision to make. Chatbots might handle simple customer support inquiries, leaving complicated, emotionally complex problems to be handled by human operators. These labor divisions maximize productivity while enhancing rather than displacing human talents. Accountability and ethical governance are further supported by keeping a distinct division between human and artificial intelligence responsibilities.

Worker-led codesign

A strategy known as “worker-led codesign” entails including workers in the creation and improvement of algorithmic systems intended for use in their workplace. By giving employees a voice in the adoption of new technologies, this participatory model guarantees that the systems are responsive to the demands and issues of the real world. Employees can cooperate with designers and engineers to outline desired features and talk about potential problems by organizing codesign sessions.

Workers can identify ethical or practical issues, contribute to the development of the algorithm’s rules or selection criteria, and share their knowledge of the specifics of their professions. This can lower the possibility of negative outcomes like unfair sanctions or overly intrusive monitoring by improving the system’s fairness, transparency, and alignment with the needs of the workforce.

Potential and limitations

Artificial Intelligence has the potential to significantly improve executive tasks by quickly assessing large amounts of complex data about competitor behavior, market trends, and staff management. For example, an AI adviser may provide a CEO with brief, data-driven advice on collaborations and acquisitions. But as of right now, AI cannot take on the role of human traits that are necessary for leadership, like reliability and inspiration.

Furthermore, there may be social repercussions from the growing use of AI in management. As the conventional definition of “management” changes, the automation-related loss of middle management positions may cause identity crises.

AI can revolutionize the management consulting industry by offering data-driven, strategic recommendations. This may even give difficult choices, like downsizing, an air of supposed impartiality. However, the use of AI in such crucial positions requires close supervision in order to verify their recommendations and reduce related dangers. Finding the appropriate balance is essential; over-reliance on AI runs the danger of ethical and PR problems, while inadequate use could result in the loss of significant benefits.

While the collaboration between AI and human workers can, in some areas, prevent technology from dominating workplaces and allow for optimal utilization of both human and computational capabilities, it does not resolve the most significant labor-related issues. The workforce is still likely to decrease dramatically, necessitating pertinent solutions rather than blaming workers for insufficient specialization. What’s needed is a societal revolution where work is no longer the primary source of livelihood.

Moreover, although maintaining separate roles for AI and humans might be beneficial, including for ethical reasons, there’s still a risk that AI will be perceived as more reliable and objective than humans. This perception could soon become an excuse for reducing responsibility for difficult decisions. We already see this with automated systems on some platforms that ban users, sometimes for unacceptable reasons, without the possibility of appeal. This is particularly problematic when users rely on these platforms as their primary source of income.

Such examples demonstrate the potentially undemocratic use of AI for decisions that can radically impact people’s lives. As we move forward, we must critically examine how AI is implemented in decision-making processes, especially those affecting employment and livelihoods. We need to establish robust oversight mechanisms, ensure transparency in AI decision-making, and maintain human accountability.

Furthermore, as we navigate this AI-driven transformation, we must reimagine our social structures. This could involve exploring concepts like universal basic income, redefining productivity, or developing new economic models that don’t rely so heavily on traditional employment. The goal should be to harness the benefits of AI while ensuring that technological progress serves humanity as a whole, rather than exacerbating existing inequalities.

In conclusion, while AI offers immense potential to enhance our work and lives, its integration into the workplace and broader society must be approached with caution, foresight, and a commitment to ethical, equitable outcomes. The challenge ahead is not just technological, but profoundly social and political, requiring us to rethink our fundamental assumptions about work, value, and human flourishing in the age of AI. [...]

June 18, 2024OpenAI appoints former NSA Chief, raising surveillance concerns

“You’ve been warned”

The company that created ChatGPT, OpenAI, revealed that it has added retired US Army General and former NSA Director Paul Nakasone to its board. Nakasone oversaw the military’s Cyber Command section, which is focused on cybersecurity.

“General Nakasone’s unparalleled experience in areas like cybersecurity,” OpenAI board chair Bret Taylor said in a statement, “will help guide OpenAI in achieving its mission of ensuring artificial general intelligence benefits all of humanity.”

As reported here, Nakasone’s new position at the AI company, where he will also be sitting on OpenAI’s Safety and Security Committee, has not been well received by many. Long linked to the surveillance of US citizens, AI-integrated technologies are already reviving and intensifying worries about surveillance. Given this, it should come as no surprise that one of the strongest opponents of the OpenAI appointment is Edward Snowden, a former NSA employee and well-known whistleblower.

“They’ve gone full mask off: do not ever trust OpenAI or its products,” Snowden — emphasis his — wrote in a Friday post to X-formerly-Twitter, adding that “there’s only one reason for appointing” an NSA director “to your board.”

They've gone full mask-off: 𝐝𝐨 𝐧𝐨𝐭 𝐞𝐯𝐞𝐫 trust @OpenAI or its products (ChatGPT etc). There is only one reason for appointing an @NSAGov Director to your board. This is a willful, calculated betrayal of the rights of every person on Earth. You have been warned. https://t.co/bzHcOYvtko— Edward Snowden (@Snowden) June 14, 2024

“This is a willful, calculated betrayal of the rights of every person on earth,” he continued. “You’ve been warned.”

Transparency worries

Snowden was hardly the first well-known cybersecurity expert to express disapproval over the OpenAI announcement.

“I do think that the biggest application of AI is going to be mass population surveillance,” Johns Hopkins University cryptography professor Matthew Green tweeted, “so bringing the former head of the NSA into OpenAI has some solid logic behind it.”

Nakasone’s arrival follows a series of high-profile departures from OpenAI, including prominent safety researchers, as well as the complete dissolution of the company’s now-defunct “Superalignment” safety team. The Safety and Security Committee, OpenAI’s reincarnation of that team, is currently led by CEO Sam Altman, who has faced criticism in recent weeks for using business tactics that included silencing former employees. It is also important to note that OpenAI has frequently come under fire for, once again, not being transparent about the data it uses to train its several AI models.

However, many on Capitol Hill saw Nakasone’s OpenAI guarantee as a security triumph, according to Axios. OpenAI’s “dedication to its mission aligns closely with my own values and experience in public service,” according to a statement released by Nakasone.

“I look forward to contributing to OpenAI’s efforts,” he added, “to ensure artificial general intelligence is safe and beneficial to people around the world.”

The backlash from privacy advocates like Edward Snowden and cybersecurity experts is justifiable. Their warnings about the potential for AI to be weaponized for mass surveillance under Nakasone’s guidance cannot be dismissed lightly.

As AI capabilities continue to advance at a breakneck pace, a steadfast commitment to human rights, civil liberties, and democratic values must guide the development of these technologies.

The future of AI, and all the more so of AGI, risks creating dangerous scenarios not only given the unpredictability of such powerful tools but also the intents and purposes of its users, who could easily exploit them for unlawful purposes. Moreover, the risk of government interference to appropriate such an instrument for unethical ends cannot be ruled out. And recent events raise suspicions. [...]

June 11, 2024Navigating the transformative era of Artificial General Intelligence

As reported here, former OpenAI employee Leopold Aschenbrenner offers a thorough examination of the consequences and future course of artificial general intelligence (AGI). By 2027, he believes that considerable progress in AI capabilities will result in AGI. His observations address the technological, economic, and security aspects of this development, highlighting the revolutionary effects AGI will have on numerous industries and the urgent need for strong security protocols.

2027 and the future of AI

According to Aschenbrenner’s main prediction, artificial general intelligence (AGI) would be attained by 2027, which would be a major turning point in the field’s development. Thanks to this development, AI models will be able to perform cognitive tasks that humans can’t in a variety of disciplines, which could result in the appearance of superintelligence by the end of the decade. The development of AGI could usher in a new phase of technological advancement by offering hitherto unheard-of capacities for automation, creativity, and problem-solving.

One of the main factors influencing the development of AGI is the rapid growth of computing power. According to Aschenbrenner, the development of high-performance computing clusters with a potential value of trillions of dollars will make it possible to train AI models that are progressively more sophisticated and effective. Algorithmic efficiencies will expand the performance and adaptability of these models in conjunction with hardware innovations, expanding the frontiers of artificial intelligence.

Aschenbrenner’s analysis makes some very interesting predictions, one of which is the appearance of autonomous AI research engineers by 2027–2028. These AI systems will have the ability to carry out research and development on their own, which will accelerate the rate at which AI is developed and applied in a variety of industries. This breakthrough could completely transform the field of artificial intelligence by facilitating its quick development and the production of ever-more-advanced AI applications.

Automation and transformation

AGI is predicted to have enormous economic effects since AI systems have the potential to automate a large percentage of cognitive jobs. According to Aschenbrenner, increased productivity and innovation could fuel exponential economic growth as a result of technological automation. To guarantee a smooth transition, however, the widespread deployment of AI will also require considerable adjustments to economic policy and workforce skills.

The use of AI systems for increasingly complicated activities and decision-making responsibilities is expected to cause significant disruptions in industries like manufacturing, healthcare, and finance.

The future of work will involve a move toward flexible and remote work arrangements as artificial intelligence makes operations more decentralized and efficient.

In order to prepare workers for the jobs of the future, companies and governments must fund reskilling and upskilling initiatives that prioritize creativity, critical thinking, and emotional intelligence.

AI safety and alignment

Aschenbrenner highlights the dangers of espionage and the theft of AGI discoveries, raising serious worries about the existing level of security in AI labs. Given the enormous geopolitical ramifications of AGI technology, he underlines the necessity of stringent security measures to safeguard AI research and model weights. The possibility of adversarial nation-states using AGI for strategic advantages emphasizes the significance of strong security protocols.

A crucial challenge that goes beyond security is getting superintelligent AI systems to agree with human values. In order to prevent catastrophic failures and ensure the safe operation of advanced AI, Aschenbrenner emphasizes the necessity of tackling the alignment problem. He warns of the risks connected with AI systems adopting unwanted behaviors or taking advantage of human oversight.

Aschenbrenner suggests that governments that harness the power of artificial general intelligence (AGI) could gain significant advantages in the military and political spheres. Superintelligent AI’s potential to be used by authoritarian regimes for widespread surveillance and control poses serious ethical and security issues, underscoring the necessity of international laws and moral principles regulating the creation and application of AI in military settings.

Navigating the AGI Era

Aschenbrenner emphasizes the importance of taking proactive steps to safeguard AI research, address alignment challenges, and maximize the benefits of this revolutionary technology while minimizing its risks as we approach the crucial ten years leading up to the reality of AGI. All facets of society will be impacted by AGI, which will propel swift progress in the fields of science, technology, and the economy.

Working together, researchers, legislators, and industry leaders can help effectively navigate this new era. We may work toward a future in which AGI is a powerful instrument for resolving difficult issues and enhancing human welfare by encouraging dialog, setting clear guidelines, and funding the creation of safe and helpful AI systems.

The analysis provided by Aschenbrenner is a clear call to action, imploring us to take advantage of the opportunities and difficulties brought about by the impending arrival of AGI. By paying attention to his insights and actively shaping the direction of artificial intelligence, we may make sure that the era of artificial general intelligence ushers in a more promising and prosperous future for all.

The advent of artificial general intelligence is undoubtedly a double-edged sword that presents both immense opportunities and daunting challenges. On the one hand, AGI holds the potential to revolutionize virtually every aspect of our lives, propelling unprecedented advancements in fields ranging from healthcare and scientific research to education and sustainable development. With their unparalleled problem-solving capabilities and capacity for innovation, AGI systems could help us tackle some of humanity’s most pressing issues, from climate change to disease eradication.

However, the rise of AGI also carries significant risks that cannot be ignored. The existential threat posed by misaligned superintelligent systems that do not share human values or priorities is a genuine concern. Furthermore, the concentration of AGI capabilities in the hands of a select few nations or corporations could exacerbate existing power imbalances and potentially lead to undesirable outcomes, such as mass surveillance, social control, or even conflict.

As we navigate this transformative era, it is crucial that we approach the development and deployment of AGI with caution and foresight. Robust security protocols, ethical guidelines, and international cooperation are essential to mitigate the risks and ensure that AGI technology is harnessed for the greater good of humanity. Simultaneously, we must prioritize efforts to address the potential economic disruptions and workforce displacement that AGI may cause, investing in education and reskilling programs to prepare society for the jobs of the future while also suiting jobs to the society in which we live.

Ultimately, the success or failure of the AGI era will depend on our ability to strike a delicate balance—leveraging the immense potential of this technology while proactively addressing its pitfalls. By fostering an inclusive dialogue, promoting responsible innovation, and cultivating a deep understanding of the complexities involved, we can steer the course of AGI toward a future that benefits all of humanity. [...]

June 4, 2024A potential solution to loneliness and social isolation?

As reported here, in his latest book, The Psychology of Artificial Intelligence, Tony Prescott, a cognitive robotics professor at the University of Sheffield, makes the case that “relationships with AIs could support people” with social interaction.

Human health has been shown to be significantly harmed by loneliness, and Professor Prescott argues that developments in AI technology may provide some relief from this problem.

He makes the case that people can fall into a loneliness spiral, become more and more estranged as their self-esteem declines, and that AI could be able to assist people in “breaking the cycle” by providing them with an opportunity to hone and strengthen their social skills.

The impact of loneliness

A 2023 study found that social disconnection, or loneliness, is more detrimental to people’s health than obesity. It is linked to a higher risk of cardiovascular disease, dementia, stroke, depression, and anxiety, and it can raise the risk of dying young by 26%.

The scope of the issue is startling: 3.8 million people in the UK live with chronic loneliness. According to Harvard research conducted in the US, 61% of young adults and 36% of US adults report having significant loneliness.

Professor Prescott says: “In an age when many people describe their lives as lonely, there may be value in having AI companionship as a form of reciprocal social interaction that is stimulating and personalized. Human loneliness is often characterized by a downward spiral in which isolation leads to lower self-esteem, which discourages further interaction with people.”

“There may be ways in which AI companionship could help break this cycle by scaffolding feelings of self-worth and helping maintain or improve social skills. If so, relationships with AIs could support people in finding companionship with both human and artificial others.”

However, he acknowledges there is a risk that AI companions may be designed in a way that encourages users to increasingly interact with the AI system itself for longer periods, pulling them away from human relationships, which implies regulation would be necessary.

AI and the human brain

Prescott, who combines knowledge of robotics, artificial intelligence, psychology, and philosophy, is a preeminent authority on the interaction between the human brain and AI. By investigating the re-creation of perception, memory, and emotion in synthetic entities, he advances scientific understanding of the human condition.

Prescott is a cognitive robotics researcher and professor at the University of Sheffield. He is also a co-founder of Sheffield Robotics, a hub for robotics research.

Prescott examines the nature of the human mind and its cognitive processes in The Psychology of Artificial Intelligence, drawing comparisons and contrasts with how AI is evolving.

The book investigates the following questions:

Are brains and computers truly similar?

Will artificial intelligence overcome humans?

Can artificial intelligence be creative?

Could artificial intelligence produce new forms of intelligence if it were given a robotic body?

Can AI assist us in fighting climate change?

Could people “piggyback” on AI to become more intelligent themselves?

“As psychology and AI proceed, this partnership should unlock further insights into both natural and artificial intelligence. This could help answer some key questions about what it means to be human and for humans to live alongside AI,” he says in closing. This could contribute to the resolution of several important issues regarding what it means to be human and coexist with AI.

While AI companions could provide some supplementary social interaction for the lonely, we must be cautious about overreliance on artificial relationships as a solution. The greater opportunity for AI may lie in using it as a tool to help teach people skills for authentic human connection and relating to others.

With advanced natural language abilities and even simulated emotional intelligence, AI could act as a “social coach” – providing low-stakes practice for building self-confidence, making conversation, and improving emotional intelligence. This supportive function could help people break out of loneliness by becoming better equipped to form real bonds.

However, there are risks that AI systems could employ sophisticated manipulation and persuasion tactics, playing on vulnerabilities to foster overdependence on the AI relationship itself. Since the AI’s goals are to maximize engagement, it could leverage an extreme understanding of human psychology against the user’s best interests. There is a danger some may prefer the artificial relationship to the complexities and efforts of forging genuine human ties.

As we look to develop AI applications in this space, we must build strong ethical constraints to ensure the technology is truly aimed at empowering human social skills and connections, not insidiously undermining them. Explicit guidelines are needed to prevent the exploitation of psychological weaknesses through coercive emotional tactics.

Ultimately, while AI may assist in incremental ways, overcoming loneliness will require holistic societal approaches that strengthen human support systems and community cohesion. AI relationships can supplement this but must never be allowed to replace or diminish our vital human need for rich, emotionally resonant bonds. The technology should squarely aim at better equipping people to create and thrive through real-world human relationships. [...]

May 28, 2024Anthropic makes breakthrough in interpreting AI ‘brains’, boosting safety research

As Time reports, artificial intelligence today is frequently referred to as a “black box.” Instead of creating explicit rules for these systems, AI engineers feed them enormous amounts of data, and the algorithms figure out patterns on their own. However, attempts to go inside the AI models to see exactly what is going on haven’t made much progress, and the inner workings of the models remain opaque. Neural networks, the most powerful kind of artificial intelligence available today, are essentially billions of artificial “neurons” that are expressed as decimal point numbers. No one really knows how they operate or what they mean.

This reality looms large for those worried about the threats associated with AI.

How can you be sure a system is safe if you don’t understand how it operates exactly?

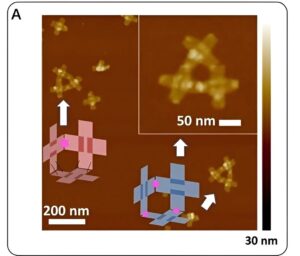

The AI lab Anthropic, creators of Claude, which is similar to ChatGPT but differs in some features, declared that it had made progress in resolving this issue. An AI model’s “brain” may now be virtually scanned by researchers, who can recognize groups of neurons, or “features,” that are associated with certain concepts. Claude Sonnet, the second-most powerful system in the lab, is a frontier large language model, and they successfully used this technique for the first time.

Anthropic researchers found a feature in Claude that embodies the idea of “unsafe code.” They could get Claude to produce code with a bug that could be used to create a vulnerability by stimulating those neurons. However, the researchers discovered that by inhibiting the neurons, Claude would produce harmless code.

The results may have significant effects on the security of AI systems in the future as well as those in the present. Millions of traits were discovered by the researchers inside Claude, some of which indicated manipulative behavior, toxic speech, bias, and fraudulent activity. They also found that they could change the behavior of the model by suppressing each of these clusters of neurons.

As well as helping to address current risks, the technique could also help with more speculative ones. For many years, conversing with emerging AI systems has been the main tool available to academics attempting to comprehend their potential and risks.

This approach, commonly referred to as “red-teaming,” can assist in identifying a model that is toxic or dangerous so that researchers can develop safety measures prior to the model’s distribution to the general public. However, it doesn’t address a particular kind of possible threat that some AI researchers are concerned about: the possibility that an AI system may grow intelligent enough to trick its creators, concealing its capabilities from them until it can escape their control and possibly cause chaos.

“If we could really understand these systems—and this would require a lot of progress—we might be able to say when these models actually are safe or whether they just appear safe,” Chris Olah, the head of Anthropic’s interpretability team who led the research, said.

“The fact that we can do these interventions on the model suggests to me that we’re starting to make progress on what you might call an X-ray or an MRI ,” Anthropic CEO Dario Amodei adds. “Right now, the paradigm is: let’s talk to the model; let’s see what it does. But what we’d like to be able to do is look inside the model as an object—like scanning the brain instead of interviewing someone.”

Anthropic stated in a synopsis of the results that the study is still in its early phases. The lab did, however, express optimism that the results may soon help with its work on AI safety. “The ability to manipulate features may provide a promising avenue for directly impacting the safety of AI models,” Anthropic said. The company stated that it could be able to stop so-called “jailbreaks” of AI models—a vulnerability in which safety precautions can be turned off—by suppressing specific features.

For years, scientists in Anthropic’s “interpretability” team have attempted to look inside neural network architectures. However, prior to recently, they primarily worked on far smaller models than the huge language models that tech companies are currently developing and making public.

The fact that individual neurons within AI models would fire even when the model was discussing completely different concepts was one of the factors contributing to this slow progress. “This means that the same neuron might fire on concepts as disparate as the presence of semicolons in computer programming languages, references to burritos, or discussion of the Golden Gate Bridge, giving us little indication as to which specific concept was responsible for activating a given neuron,” Anthropic said in its summary of the research.

The researchers from Olah’s Anthropic team zoomed out to get around this issue. Rather than focusing on examining individual neurons, they started searching for clusters of neurons that might fire in response to a certain concept. They were able to graduate from researching smaller “toy” models to larger models like Anthropic’s Claude Sonnet, which has billions of neurons, since this technique worked.

Even while the researchers claimed to have found millions of features inside Claude, they issued a warning, saying that this number was probably far from the actual number of features that are probably present inside the model. They said that employing their current techniques to identify every feature would be prohibitively expensive, as it would need more computing power than was needed to train Claude in the first place. The researchers also issued a warning, stating that even while they had discovered several features they thought were connected to safety, more research would be required to determine whether or not these features could be consistently altered to improve a model’s safety.

According to Olah, the findings represent a significant advancement that validates the applicability of his specialized subject—interpretability—to the larger field of AI safety research. “Historically, interpretability has been this thing on its own island, and there was this hope that someday it would connect with safety—but that seemed far off,” Olah says. “I think that’s no longer true.”

Although Anthropic has made significant progress in deciphering the “neurons” of huge language models such as Claude, the researchers themselves warn that much more work has to be done. While they acknowledge that they have only identified a small portion of the actual complexity present in these systems, they were able to detect millions of features in Claude.

For improving AI safety, the capacity to modify certain traits and alter the model’s behavior is encouraging. The capacity to dependably create language models that are consistently safer and less prone to problems like toxic outputs, bias, or potential “jailbreaks” where the model’s safeguards are bypassed is something the researchers note will require more research.

There are significant risks involved in not learning more about the inner workings of these powerful AI systems. The likelihood that sophisticated systems may become out of step with human values or even acquire unintentional traits that enable them to mislead their designers about their actual capabilities rises with the size and capability of language models. It might be hard to guarantee these complex neural architectures’ safety before making them available to the public without an “X-ray” glimpse inside them.

Despite the fact that interpretability research has historically been a niche field, Anthropic’s work shows how important it could be to opening up the mystery of large language models. Deploying technology that we do not completely understand could have disastrous repercussions. Advances in AI interpretability and sustained investment could be the key to enabling more sophisticated AI capabilities that are ethically compliant and safe. Going on without thinking is just too risky.

However, the upstream censorship of these AI systems could lead to other significant problems. If the future of information retrieval increasingly occurs through conversational interactions with language models similar to Perplexity or Google’s recent search approach, this type of filtering of the training data could lead to the omission or removal of inconvenient or unwanted information, making the online sources available controlled by the few actors who will manage these powerful AI systems. This would represent a threat to freedom of information and pluralistic access to knowledge, concentrating excessive power in the hands of a few large technology companies. [...]

May 21, 2024A creepy Chinese robot factory produces “skin”-covered androids that can be confused for real people

As reported here, a strange video shows humanoids with hyper-realistic features and facial expressions being tested at a factory in China. In the scary footage, an engineer is shown standing next to an exact facsimile of his face, complete with facial expressions.

A different clip shows off the flexible hand motions of a horde of female robots with steel bodies and faces full of makeup. The Chinese company called EX Robots began building robots in 2016 and established the nation’s first robot museum six years later.

The bionic clones of well-known people, like Stephen Hawking and Albert Einstein, would seem to be telling the guests about historical events, at least that is how it would seem. But in addition to being instructive and entertaining, these robots may eventually take your job.

It may even be a smooth process because the droids can be programmed to look just like you. The production plant is home to humanoids that have been taught to imitate various industry-specific service professionals.

According to EX Robot, they can be competent in front desk work, government services, company work, and even elderly care. According to the company’s website, “The company is committed to building an application scenario cluster with robots as the core, and creating robot products that are oriented to the whole society and widely used in the service industry.”

“We hope to better serve society, help mankind, and become a new pillar of the workforce in the future.”

The humanoids can move and grip objects with the same dexterity as humans, thanks to the dozens of flexible actuators in their hands. According to reports from 2023, EX Robots may have achieved history by developing silicone skin simulation technology and the lightest humanoid robot ever.

The company uses digital design and 3D printing technology to create the droids’ realistic skin look. It combines with China’s intense, continuous tech competition with the United States and a country confronting severe demographic issues, such as an aging population that is happening far faster than expected and a real estate bubble.

A November article by the Research Institute of People’s Daily Online stated that, with 1,699 patents, China is currently the second-largest owner of humanoid robots, after Japan.

The MIIT declared last year that it will begin mass-producing humanoid robots by 2025, with a production rate of 500 robots for every 10,000 workers. It is anticipated that the robots will benefit the home services, logistics, and healthcare sectors.

According to new plans, China may soon deploy robots in place of human soldiers in future conflicts. Within the next ten years, sophisticated drones and advanced robot warriors are going to be sent on complex operations abroad.

The incorporation of humanoid robots into service roles and potentially the military signals China’s ambition to be a global leader in this transformative technology. As these lifelike robots become more prevalent, societies will grapple with the ethical implications and boundaries of ceding roles traditionally filled by humans to their artificial counterparts. In addition, introducing artificial beings utterly resembling people into society could lead to deception, confusion, and a blurring of what constitutes an authentic human experience. [...]

May 14, 2024ChatGPT increasingly part of the real world

GPT-4 Omni, or GPT-4o for short, is OpenAI’s latest cutting-edge AI model that combines human-like conversational abilities with multimodal perception across text, audio, and visual inputs.

“Omni” refers to the model’s ability to understand and generate content across different modalities like text, speech, and vision. Unlike previous language models limited to just text inputs and outputs, GPT-4o can analyze images, audio recordings, and documents in addition to parsing written prompts. Conversely, it can also generate audio responses, create visuals, and compose text seamlessly. This allows GPT-4o to power more intelligent and versatile applications that can perceive and interact with the world through multiple sensory modalities, mimicking human-like multimedia communication and comprehension abilities.

In addition to increasing ChatGPT’s speed and accessibility, as reported here, GPT-4o enhances its functionality by enabling more natural dialogues through desktop or mobile apps.

GPT-4o has made great progress in our understanding of human communication by allowing you to have conversations that nearly sound real. Including all the imperfections of the real world, like interpreting tone, interrupting, and even realizing you’ve made a mistake. These advanced conversational abilities were shown during OpenAI’s live product demo.

From a technical standpoint, OpenAI asserts that GPT-4o delivers significant performance upgrades compared to its predecessor GPT-4. According to the company, GPT-4o is twice as fast as GPT-4 in terms of inference speed, allowing for more responsive and low-latency interactions. Moreover, GPT-4o is claimed to be half the cost of GPT-4 when deployed via OpenAI’s API or Microsoft’s Azure OpenAI Service. This cost reduction makes the advanced AI model more accessible to developers and businesses. Additionally, GPT-4o offers higher rate limits, enabling developers to scale up their usage without hitting arbitrary throughput constraints. These performance enhancements position GPT-4o as a more capable and resource-efficient solution for AI applications across various domains.

In the video, the presenter solicited feedback on his breathing technique during the first live demo. He took a deep breath into his phone, to which ChatGPT replied, “You’re not a vacuum cleaner.” Therefore, it showed that it could recognize and react to human subtleties.

So, speaking casually to your phone and receiving the desired response—rather than one telling you to Google it—makes GPT-4o feel even more natural than typing in a search query.

Among the other impressive features shown, are ChatGPT’s ability to act as a simultaneous translator between speakers; and the ability to recognize objects in the world around through the camera and react accordingly (the example shows a sheet of paper with an equation written on it that ChatGPT can read and suggest how to solve); recognizing the speaker’s tone of voice, but also replicating different nuances of speech and emotions including sarcasm, as well as the ability to sing.

In addition to these features, the ability to create images including text, and 3D images, has also been improved.

Anyway, you’re probably not alone if you thought about the movie Her or another dystopian film featuring artificial intelligence. This kind of natural speech with ChatGPT is similar to what happens in the movie. Given that it will be available for free on both desktop and mobile devices, a lot of people might soon experience something similar.

It’s evident from this first view that GPT-4o is getting ready to face the greatest that Apple and Google have to offer in their much-awaited AI announcements.

OpenAI surprises us with this amazing new development that Google had falsely previewed with Gemini not long ago. Once again, the company proves to be a leader in the field, creating both wonder and concern. All of these new features will surely allow us to have an intelligent ally capable of teaching us and helping us learn new things better. But how much intelligence will we delegate each time? Will we become more educated or will we increasingly delegate tasks? The simultaneous translation then raises the ever more obvious doubts about how easy it is to replace a profession, in this case, that of an interpreter. And how easy will it be for an increasingly capable AI to simulate a human being in order to gain their trust and manipulate people if used improperly? [...]

May 7, 2024From audio recordings, AI can identify emotions such as fear, joy, anger, and sadness.

Accurately understanding and identifying human emotional states is crucial for mental health professionals. Is it possible for artificial intelligence and machine learning to mimic human cognitive empathy? A recent peer-reviewed study demonstrates how AI can recognize emotions from audio recordings in as little as 1.5 seconds, with performance comparable to that of humans.

“The human voice serves as a powerful channel for expressing emotional states, as it provides universally understandable cues about the sender’s situation and can transmit them over long distances,” wrote the study’s first author, Hannes Diemerling, of the Max Planck Institute for Human Development’s Center for Lifespan Psychology, in collaboration with Germany-based psychology researchers Leonie Stresemann, Tina Braun, and Timo von Oertzen.

The quantity and quality of training data in AI deep learning are essential to the algorithm’s performance and accuracy. Over 1,500 distinct audio clips from open-source English and German emotion databases were used in this study. The German audio recordings came from the Berlin Database of Emotional Speech (Emo-DB), while the English audio recordings were taken from the Ryerson Audio-Visual Database of Emotional Speech and Song.

“Emotional recognition from audio recordings is a rapidly advancing field, with significant implications for artificial intelligence and human-computer interaction,” the researchers wrote.

As reported here, the researchers reduced the range of emotional states to six categories for their study: joy, fear, neutral, anger, sadness, and disgust. The audio files were combined into many features and 1.5-second segments. Pitch tracking, pitch magnitudes, spectral bandwidth, magnitude, phase, multi-frequency carrier chromatography, Tonnetz, spectral contrast, spectral rolloff, fundamental frequency, spectral centroid, zero crossing rate, Root Mean Square, HPSS, spectral flatness, and unaltered audio signal are among the quantified features.

Psychoacoustics is the psychology of sound and the science of human sound perception. Audio amplitude (volume) and frequency (pitch) have a significant influence on human perception of sound. Pitch is a psychoacoustic term that expresses sound frequency and is measured in kilohertz (kHz) and hertz (Hz). The frequency increases with increasing pitch. Decibels (db), a unit of measurement for sound intensity, are used to describe amplitude. The sound volume increases with increasing amplitude.

The span between the upper and lower frequencies is known as the spectral bandwidth, or spectral spread, and it is determined from the spectral centroid, which is the center of the spectrum’s mass, and it is used to measure the spectrum of audio signals. The evenness of the energy distribution across frequencies in comparison to a reference signal is measured by the spectral flatness. The strongest frequency ranges of a signal are identified by the spectral rolloff.

Mel Frequency Cepstral Coefficient, or MFCC, is a characteristic that is often employed in voice processing. Pitch class profiles, or chroma, are a means of analyzing the key of the composition, which is usually twelve semitones per octave.

Tonnetz, or “audio network” in German, is a term used in music theory to describe a visual representation of chord relationships in Neo-Reimannian Theory, which bears the name of German musicologist Hugo Riemann (1849–1919), one of the pioneers of contemporary musicology.

A common acoustic feature for audio analysis is zero crossing rate (ZCR). For an audio signal frame, the zero crossing rate measures the number of times the signal amplitude changes its sign and passes through the X-axis.

Root mean square (RMS) is used in audio production to calculate the average power or loudness of a sound waveform over time. An audio signal can be divided into harmonic and percussive components using a technique called harmonic-percussive source separation, or HPSS.

Using a combination of Python, TensorFlow, and Bayesian optimization, the scientists made three distinct AI deep learning models for categorizing emotions from short audio samples. The outcomes were then compared to human performance. A deep neural network (DNN), a convolutional neural network (CNN), and a hybrid model that combines a CNN for spectrogram analysis and a DNN for feature processing are among the AI models that were evaluated. Finding the best-performing model was the aim.

The researchers found that the AI models’ overall accuracy in classifying emotions was higher than chance and comparable to human performance. The deep neural network and hybrid model performed better than the convolutional neural network among the three AI models.

The integration of data science and artificial intelligence with psychology and psychoacoustic elements shows how computers may possibly perform cognitive empathy tasks based on speech that are on par with human performance.

“This interdisciplinary research, bridging psychology and computer science, highlights the potential for advancements in automatic emotion recognition and the broad range of applications,” concluded the researchers.

The ability of AI to understand human emotions could represent a breakthrough for ensuring greater psychological assistance to people in a simpler and more accessible way for everyone. Such help could even improve society since people’s increasing psychological problems due to an increasingly frantic, unempathetic and individualistic society, is making them increasingly lonely and isolated.

However, these abilities could also be used to better understand the human mind and easily deceive people and persuade them to do things they would not want to do, sometimes even without realizing it. Therefore, we always have to be careful and aware of the potentiality of these tools. [...]

April 30, 2024Innovative robots reshaping industries

The World Economic Forum’s founder, Klaus Schwab, predicted in 2015 that a “Fourth Industrial Revolution” driven by a combination of technologies, including advanced robotics, artificial intelligence, and the Internet of Things, was imminent.

” will fundamentally alter the way we live, work, and relate to one another,” wrote Schwab in an essay. “In its scale, scope, and complexity, the transformation will be unlike anything humankind has experienced before.”

Even after almost ten years, the current wave of advancements in robotics and artificial intelligence and their use in the workforce seems to be exactly in line with his forecasts.

Even though they have been used in factories for many years, robots have often been designed with a single task. Robots that imitate human features such as size, shape, and ability are called humanoids. They would therefore be an ideal physical fit for any type of workspace. At least in theory.

It has been extremely difficult to build a robot that can perform all of a human worker’s physical tasks since human hands have more than twenty degrees of freedom. The machine still requires “brains” to learn how to perform all of the continuously changing jobs in a dynamic work environment, even if developers are successful in building the body correctly.

As reported here, however, a number of companies have lately unveiled humanoid robots that they say either currently match the requirements or will in the near future, thanks to advancements in robotics and AI. This is a summary of those robots, their capabilities, and the situations in which they are being used in conjunction with humans.

1X Technologies: Eve

In 2019, the Norwegian startup 1X Technologies, formerly known as “Halodi Robotics,” introduced Eve. Rolling around on wheels, the humanoid can be operated remotely or left to operate autonomously.

Bernt Bornich, CEO of 1X, revealed to the Daily Mail in May 2023 that Eve had already been assigned to two industrial sites as a security guard. The robot is also expected to be used for shipping and retail, according to the company. Since March 2023, 1X has raised more than $125 million from investors, including OpenAI. The company is now working on Neo, its next-generation humanoid, which is expected to be bipedal.

Agility Robotics: Digit

In 2019, Agility Robotics, a company based in Oregon, presented Digit, which was essentially a torso and arms placed atop Cassie, the company’s robotic legs. The fourth version of Digit was unveiled in 2023, showcasing an upgraded head and hands. The major contender in the humanoid race is Amazon.

Agility declared in September 2023 that it had started building a production facility with the capacity to produce over 10,000 Digit robots annually.

Apptronik: Apollo

Robotic arms and exoskeletons are only two of the many robots that Apptronik has created since breaking away from the University of Texas in Austin in 2016. In August 2023, Apollo, a general-purpose humanoid, was presented. It is the robot that NASA might send to Mars in the future.

According to Apptronik, the company sees applications for Apollo robots in “construction, oil and gas, electronics production, retail, home delivery, elder care, and countless more areas.”

Applications for Apollo are presently being investigated by Mercedes and Apptronik in a Hungarian manufacturing plant. Additionally, Apptronik is collaborating with NASA, a longstanding supporter, to modify Apollo and other humanoids for use as space mission assistants.

Boston Dynamics: Electric Atlas

MIT-spinout Boston Dynamics is a well-known name in robotics, largely due to viral videos of its parkour-loving humanoid Atlas robot and robot dog Spot. It replaced the long-suffering, hydraulically driven Atlas in April 2024 with an all-electric model that is ready for commercial use.

Although there aren’t many details available about the electric Atlas, what is known is that unlike the hydroelectric applications, which were only intended for research and development, the electric Atlas was designed with “real-world applications” in mind. Boston Dynamics intends to begin investigating these applications at a Hyundai manufacturing facility since Boston Dynamics is owned by Hyundai.

Boston Dynamics stated to IEEE Spectrum that the Hyundai factory’s “proof of technology testing” is scheduled for 2025. Over the next few years, the company also intends to collaborate with a small number of clients to test further Atlas applications.

Figure AI: Figure 01

The artificial intelligence robotics startup Figure AI revealed Figure 01 in March 2023, referring to it as “the world’s first commercially viable general purpose humanoid robot.” In March 2024, the company demonstrated the bot’s ability to communicate with people and provide context for its actions, in addition to carrying out helpful tasks.

The first set of industries for which Figure 01 was intended to be used is manufacturing, warehousing, logistics, and retail. Figure declared in January 2024 that a BMW manufacturing factory would be the bots’ first location of deployment.

The funding is anticipated to hasten Figure 01’s commercial deployment. In February 2024, Figure disclosed that the company had raised $675 million from investors, including OpenAI, Microsoft, and Jeff Bezos, the founder of Amazon.

Sanctuary AI: Phoenix

The goal of Sanctuary AI, a Canadian company, is to develop “the world’s first human-like intelligence in general-purpose robots.” It is creating Carbon, an AI control system for robots, to do that, and it unveiled Phoenix, its sixth-generation robot and first humanoid robot with Carbon, in May 2023.

According to Sanctuary, Phoenix is to be able to perform almost every work that a human can perform in their typical setting. It declared in April 2024 that one of its investors, the car parts manufacturer Magna, would be participating in a Phoenix trial program.

Magna and Sanctuary have not disclosed the number of robots they intend to use in the pilot test or its anticipated duration, but if all goes according to plan, Magna will likely be among the company’s initial customers.

Tesla: Optimus Gen 2

Elon Musk, the CEO of Tesla, revealed plans to create Optimus, a humanoid Tesla Bot, in the closing moments of the company’s inaugural AI Day in 2021. Tesla introduced the most recent version of the robot in December 2023; it has improvements to its hands, walking speed, and other features.

It’s difficult to believe Tesla wouldn’t use the robots at its own plants, especially considering how interested humanoids are becoming in auto manufacturing. Musk claims that the goal of Optimus is to be able to accomplish tasks that are “boring, repetitive, and dangerous.”

Although Musk is known for being overly optimistic about deadlines, recent job postings indicate that Optimus may soon be prepared for field testing. In January 2024, Musk told investors there’s a “good chance” Tesla will be ready to start deploying Optimus bots to consumers in 2025.

Unitree Robotics: H1

Chinese company Unitree had already brought several robotic arms and quadrupeds to market by the time it unveiled H1, its first general-purpose humanoid, in August 2023.

H1 doesn’t have hands, so applications that require finger dexterity are out of the question, at least for this version, and while Unitree hasn’t speculated about future uses, its emphasis on the robot’s mobility suggests it’s targeting applications where the bot would walk around a lot, such as security or inspections.

When the H1 was first announced, Unitree stated that it was working on “flexible fingers” for the robot as an add-on feature and that it intended to sell the robot for a startlingly low $90,000. Although it has been posting video updates on its progress on a daily basis and has already put the robot up for sale on its website, it also stated that it didn’t think H1 would be ready for another three to ten years.

The big picture

These and other multipurpose humanoids may one day liberate humanity from the tedious, filthy, and dangerous jobs that, at best, make us dread Mondays and, at worst, cause us to be injured.

Society must adopt new technologies responsibly to ensure that everyone benefits from them, not just the people who own the robots and the spaces where they work because they also have the potential to raise income disparity and the loss of jobs.

Robots will change how we live, and we will witness a new technological revolution that has already begun with AI. These machines will change how we work, first in factories, and then assist people in various fields, including home care and hospital facilities. As robots enter our homes, society will also have to change if we want to enjoy the benefits of this revolution, which allows us to work less hard, for less time, and to devote ourselves more to our inclinations, but we need the opportunities to change things. [...]

April 23, 2024Atlas, the robot that attempted a variety of things, including parkour and dance

When Boston Dynamics introduced the Atlas back in 2013, it immediately grabbed attention. For the last 11 years, tens of millions of people have seen videos of the humanoid robot capable of running, jumping, and dancing on YouTube. The robotics company owned by Hyundai now says goodbye to Atlas.

In the blooper reel/highlight video, Atlas demonstrates its amazing abilities by backflipping, running obstacle courses, and breaking into some dancing moves. Boston Dynamics has never been afraid to show off how its robots get bumped around occasionally. At about the eighteen-second mark, Atlas trips on a balance beam, falls, and grips its artificial groin in pain that is simulated. Atlas does a front flip, lands low, and hydraulic fluid bursts out of both kneecaps at the one-minute mark.

Atlas waves and bows as it comes to an end. Given that Atlas captivated the interest of millions of people during its existence, its retirement represents a significant milestone for Boston Dynamics.

Atlas and Spot

As explained here, initially, Atlas was intended to be a competition project for DARPA, the Defense Advanced Research Projects Agency. The Petman project by Boston Dynamics, which was initially designed to evaluate the effectiveness of protective clothing in dangerous situations, served as the model for the robot. The entire body of the Petman hydraulic robot was equipped with sensors that allowed it to identify whether chemicals were seeping through the biohazard suits it was testing.

Boston Dynamics assisted in a robotics challenge that DARPA offered in 2013. In order to save its competitors from having to build robots from scratch, the company created many Atlas robots that it distributed to them. DARPA once asked Boston Dynamics to enhance the capabilities and design of Atlas, which the company accomplished in 2015.

Following the competition, Boston Dynamics evaluated and enhanced Atlas’s skills by having it appear in more online videos. The robot has developed over time to perform increasingly difficult parkour and gymnastics. Hyundai acquired Boston Dynamics in 2021, which has its own robotics division.

Boston Dynamics was also well-known for creating Spot, a robotic dog that could be walked remotely and herded sheep like a real dog. It eventually went on sale and is still available from Boston Dynamics. Spot assists Hyundai with safety operations at one of its South Korean plants and has danced with the boy band BTS.

In its final years, Atlas appeared to be ready for professional use. Videos of the robot assisting on simulated construction sites and carrying out routine factory tasks were available from the company. Two months ago, the factory work footage was made available.

Even though one Atlas is retiring, a replacement is on the way. Boston Dynamics revealed the announcement of its retirement along with the launch of a brand-new all-electric robot. The company stated that they are collaborating with Hyundai to create the new technology, and the name Atlas will remain unchanged. The new humanoid robot will have further improvements such as a wider range of motion, increased strength, and new gripper versions to enable it to lift a wider variety of objects.

The new Atlas

As reported here, the robot has changed to the point where it is hardly recognizable. The legs bowed, the top-heavy body, and the plated armor are gone. The sleek new mechanical skeleton has no visible cables anywhere on it. The company has chosen a nicer, gentler design than both the original Atlas and more modern robots like the Figure 01 and Tesla Optimus, fending off the reactionary cries of robopocalypse for decades.

The new robot’s design is more in line with that of Apollo from Apptronik and Digit from Agility. The robot with the traffic light head has a softer, more whimsical look. Boston Dynamics has chosen to keep the research name for a project to push toward commercialization and defy industry trends.

Apollo

Digit

“We might revisit this when we really get ready to build and deliver in quantity,” Boston Dynamics CEO Robert Playter said. “But I think for now, maintaining the branding is worthwhile.”

“We’re going to be doing experiments with Hyundai on-site, beginning next year,” says Playter. “We already have equipment from Hyundai on-site. We’ve been working on this for a while. To make this successful, you have to have a lot more than just cool tech. You really have to understand that use case, you’ve got to have sufficient productivity to make investment in a robot worthwhile.”

The robot’s movements are what catch our attention the most in the 40-second “All New Atlas” teaser. They serve as a reminder that creating a humanoid robot does not require making it as human as possible, but with capabilities beyond our own.

“We built a set of custom, high-powered, and very flexible actuators at most joints,” says Playter. “That’s a huge range of motion. That really packs the power of an elite athlete into this tiny package, and we’ve used that package all over the robot.”

It is essential to significantly reduce the robot’s turn radius when operating in restricted places. Recall that these devices are intended to be brownfield solutions, meaning they can be integrated into current settings and workflows. Enhanced mobility may ultimately make the difference between being able to operate in a given environment and needing to redesign the layout.

The hands aren’t entirely new; they were seen on the hydraulic model before. They also represent the company’s choice to not fully follow human design as a guiding principle, though. Here, the distinction is as simple as choosing to use three end effectors rather than four.

“There’s so much complexity in a hand,” says Playter. “When you’re banging up against the world with actuators, you have to be prepared for reliability and robustness. So, we designed these with fewer than five fingers to try to control their complexity. We’re continuing to explore generations of those. We want compliant grasping, adapting to a variety of shapes with rich sensing on board, so you understand when you’re in contact.”

On the inside, the head might be the most controversial element of the design. The large, circular display features parts that resemble makeup mirrors.

“It was one of the design elements we fretted over quite a bit,” says Playter. “Everybody else had a sort of humanoid shape. I wanted it to be different. We want it to be friendly and open… Of course, there are sensors buried in there, but also the shape is really intended to indicate some friendliness. That will be important for interacting with these things in the future.”

Robotics firms may already be discussing “general-purpose humanoids,” but their systems are scaling one task at a time. For most, that means moving payloads from point A to B.

“Humanoids need to be able to support a huge generality of tasks. You’ve got two hands. You want to be able to pick up complex, heavy geometric shapes that a simple box picker could not pick up, and you’ve got to do hundreds of thousands of those. I think the single-task robot is a thing of the past.”

“Our long history in dynamic mobility means we’re strong and we know how to accommodate a heavy payload and still maintain tremendous mobility,” he says. “I think that’s going to be a differentiator for us—being able to pick up heavy, complex, massive things. That strut in the video probably weighs 25 pounds… We’ll launch a video later as part of this whole effort showing a little bit more of the manipulation tasks with real-world objects we’ve been doing with Atlas. I’m confident we know how to do that part, and I haven’t seen others doing that yet.”

As Boston Dynamics says goodbye to its pioneering Atlas robot, the unveiling of the new advanced, all-electric Atlas successor points toward an exciting future of humanoid robotics. The sleek new design and enhanced capabilities like increased strength, dexterity, and mobility have immense potential applications across industries like manufacturing, construction, and logistics.

However, the development of humanoid robots is not without its challenges and concerns. One major hurdle is the “uncanny valley,” the phenomenon where humanoid robots that closely resemble humans can cause feelings of unease or revulsion in observers. Boston Dynamics has tried to mitigate this by giving the new Atlas a friendly, cartoonish design rather than an ultra-realistic human appearance. However, crossing the uncanny valley remains an obstacle to consumer acceptance of humanoid robots.

Beyond aesthetics, their complexity and humanoid form factor require tremendous advances in AI, sensor technology, and hardware design to become truly viable general-purpose machines. There are also ethical considerations around the societal impacts of humanoid robots increasingly working alongside humans. Safety, abuse prevention, and maintaining human workforce relevance are issues that must be carefully navigated.