The research analyzed how people perceive human-like robots while interacting with them

According to research published by the American Psychological Association in the journal Technology, Mind, and Behavior, our tendency to humanize robots, especially those that engage with people and display human-like emotions, may lead us to believe they are capable of thinking on their own rather than simply following a program.

“The relationship between anthropomorphic shape, human-like behavior, and the tendency to attribute independent thought and intentional behavior to robots is yet to be understood”, said study author Agnieszka Wykowska, Ph.D., a principal investigator at the Italian Institute of Technology.

“As artificial intelligence increasingly becomes a part of our lives, it is important to understand how interacting with a robot that displays human-like behaviors might induce a higher likelihood of the attribution of intentional agency to the robot”.

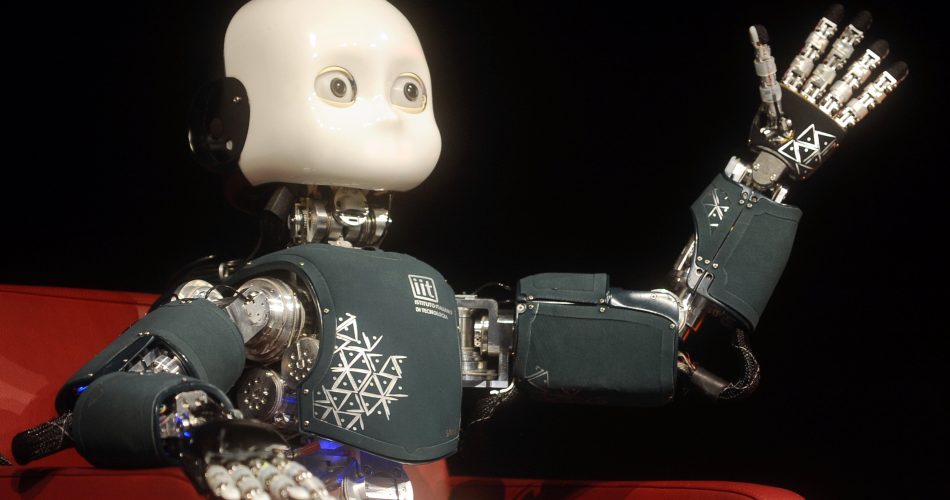

The iCub humanoid robot was used in three experiments with 119 participants to see how people would perceive it after interacting with it and watching videos together.

The child-sized humanoid robot called iCub was built in Italy and can grab items, crawl, and communicate with humans. It is intended to serve as an open-source platform for robotics, artificial intelligence, and cognitive science research.

Before and after interacting with the robot, participants completed a questionnaire that showed photos of the robot in various scenarios and asked them to choose whether they believed the robot’s behavior in each scenario was mechanical or intentional.

In the first two experiments, the researchers remotely controlled iCub’s behavior so that it would act naturally: introducing itself, greeting participants, and asking for their names. Cameras in the robot’s eyes could detect the participants’ faces and maintain eye contact. The participants then watched three short documentaries with the robot, which was programmed to respond to the videos with sounds and facial expressions of sadness, awe, or delight.

In the third experiment, the researchers programmed iCub to act more like a machine while it watched the videos with the participants. The cameras in its eyes were turned off, so it could not maintain eye contact, and it spoke to the participants only in recorded lines about the calibration procedure it was going through. All emotional responses to the videos were replaced with a “beep” and repetitive movements of its torso, head, and neck.

The researchers found that participants who interacted with the human-like robot were more likely to perceive the robot’s actions as intentional rather than programmed, whereas those who interacted only with the machine-like robot were not. This shows that people do not automatically assume that a robot that merely resembles a human being can think and feel. Human-like behavior may be essential for a robot to be perceived as an intentional agent.

These results, in Wykowska’s opinion, suggest that when artificial intelligence gives the appearance of mimicking human behavior, people may be more likely to assume that it is capable of independent thought. She suggested that this might influence the design of social robots in the future.

“Social bonding with robots might be beneficial in some contexts, like with socially assistive robots. For example, in elderly care, social bonding with robots might induce a higher degree of compliance concerning following recommendations regarding taking medication”, Wykowska said.

“Determining contexts in which social bonding and attribution of intentionality are beneficial for the well-being of humans is the next step of research in this area”.

Although human-like robots may seem like a solution for engaging people who need social assistance, they could also be used to manipulate or deceive people. The question, therefore, is whether a non-human-like robot can be as useful as a human-like one while posing fewer risks.