Facebook A.I.’s team is developing a sense of touch for future robots

Meta Platforms Inc. (formerly Facebook) is moving toward giving robots a sense of touch, thanks to two new sensors it has created. The idea is not limited to robotics, though: it may also become part of a broader push for realism in the metaverse.

The first is a high-resolution robotic fingertip sensor called DIGIT; the second is a kind of robotic skin called ReSkin. These sensors can help A.I. discern information such as an object’s texture, weight, temperature, and state.

DIGIT

DIGIT consists of a gel-like silicone pad, shaped like the tip of your thumb, that sits on top of a plastic square housing the sensors, a camera, and PCB-mounted lights that illuminate the silicone. Whenever the silicone gel touches an object, it creates shadows or shifts in color hue in the image recorded by the camera.
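To make that sensing principle concrete, here is a minimal Python sketch of one simple way to turn such image changes into a contact signal: compare each camera frame with a reference frame captured while nothing touches the gel. This is not DIGIT’s or PyTouch’s actual method; the frame format (grayscale pixel rows in [0, 1]), the function names `contact_score` and `is_in_contact`, and the 0.05 threshold are all hypothetical choices made for illustration.

```python
from typing import List

# Hypothetical frame format: a grayscale image as rows of pixel intensities in [0, 1].
Frame = List[List[float]]


def contact_score(reference: Frame, current: Frame) -> float:
    """Mean absolute per-pixel change between a no-contact reference frame and the current frame."""
    if len(reference) != len(current) or any(
        len(ref_row) != len(cur_row) for ref_row, cur_row in zip(reference, current)
    ):
        raise ValueError("frames must have the same shape")
    total = 0.0
    count = 0
    for ref_row, cur_row in zip(reference, current):
        for ref_px, cur_px in zip(ref_row, cur_row):
            # Shadows or hue shifts caused by the pressed gel show up as pixel changes.
            total += abs(cur_px - ref_px)
            count += 1
    return total / count if count else 0.0


def is_in_contact(reference: Frame, current: Frame, threshold: float = 0.05) -> bool:
    """Hypothetical rule: report contact when the average change exceeds the threshold."""
    return contact_score(reference, current) > threshold


# Usage: a uniform 4x4 "untouched" frame and a frame with a two-pixel shadow.
reference = [[0.5] * 4 for _ in range(4)]
pressed = [row[:] for row in reference]
pressed[1][1] = 0.0
pressed[1][2] = 0.0

print(contact_score(reference, pressed))  # 0.0625
print(is_in_contact(reference, pressed))  # True
print(is_in_contact(reference, reference))  # False
```

A real sensor would also have to cope with noise, lighting drift, and per-unit differences, so a fixed-threshold rule like this is only a starting point.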

Combined with other sensors, tactile sensing will help A.I. achieve more human-like perception, which could be useful in many fields where items and objects must be handled more gently and safely, as Meta A.I. researchers Roberto Calandra and Mike Lambeta suggest.

But first, sensors must be able to collect information from the things they touch, so that A.I. can learn from the many parameters a surface can provide, such as contact forces and other features.

To this end, Meta open-sourced the blueprints for DIGIT in 2020, describing it as easy to build, reliable, low-cost, compact, and high-resolution. Now Meta hopes to take DIGIT to the next level by partnering with a startup, GelSight Inc., to commercialize the sensor, making it more widely available to researchers and speeding up innovation.

ReSkin

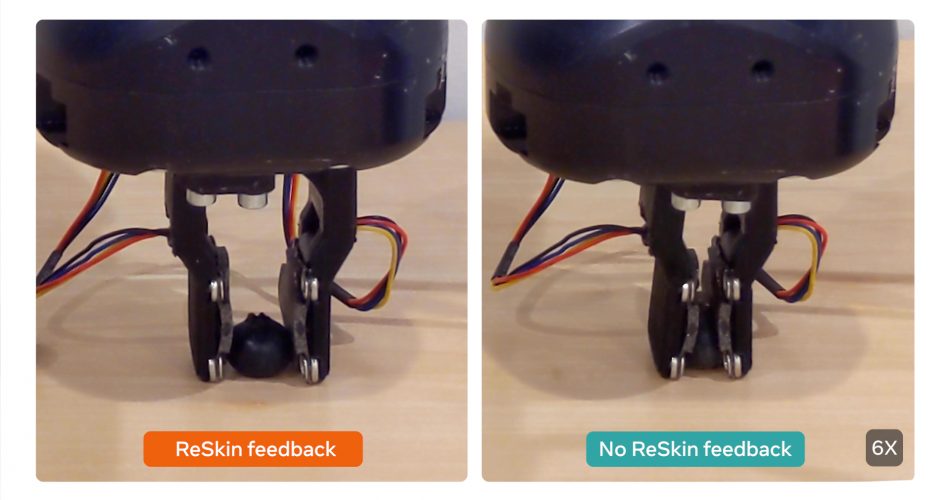

ReSkin, on the other hand, is a completely new sensor that works as a synthetic skin for robot hands. It is useful in a wide range of touch-based tasks, including object classification, proprioception, and robotic grasping, as Meta A.I. researchers Abhinav Gupta and Tess Hellebrekers wrote in a blog post.

Tactile sensing will be very important wherever high sensitivity is required, such as in health care settings, or where greater dexterity is needed, such as when manipulating small, soft, or sensitive objects.

A major advantage of ReSkin is that it is extremely affordable to manufacture: Meta says it costs less than $6 per unit when produced in batches of 100, and even less at larger quantities. ReSkin is 2 to 3 millimeters thick, has a lifespan of 50,000 interactions, and offers a high temporal resolution of up to 400Hz and millimeter-scale spatial resolution with 90% accuracy.

Thanks to these specifications, it is suited to a variety of applications, including robot hands, tactile gloves, arm sleeves, and even dog shoes. As a result, researchers should be able to collect a wide range of tactile data that was previously unattainable, or at least extremely difficult and expensive to gather. ReSkin also provides high-frequency, three-axis tactile signals that can be used for fast manipulation tasks such as throwing, slipping, catching, and clapping.

TACTO

To help the research community, Meta has created and open-sourced a simulator called TACTO, which allows experimentation even without hardware. Simulators are essential to advancing artificial intelligence research in most fields because they let researchers test and evaluate hypotheses without conducting time-consuming trials in the real world.

“TACTO can render realistic high-resolution touch readings at hundreds of frames per second, and can be easily configured to simulate different vision-based tactile sensors, including DIGIT,” Calandra and Lambeta said.

PyTouch

Researchers also need a way to process the data and extract information from it. Meta is contributing here as well with PyTouch, a library of machine learning models that translate raw sensor values into high-level features, such as detecting slip or recognizing the material the sensor has come into contact with.

PyTouch allows researchers to train and deploy models across a variety of sensors, providing basic functionality such as detecting contact and slip and estimating object pose.

The idea is also to let researchers connect a DIGIT sensor, download a pre-trained model, and use it as a basic building block for their robotic applications.

Calandra and Lambeta said that more hardware is needed to build robots with realistic, human-like touch sensitivity, such as sensors that can detect an object’s temperature. Researchers must also better understand which aspects of touch are most relevant for various tasks, and which machine learning architectures are best suited to processing touch data.

This technology could also unlock many possibilities for augmented reality and virtual reality, as well as robotic innovation in the industrial, medical, and agricultural fields.

This is one more step toward robots with ever more human-like capabilities, as we may have imagined them many years ago. It remains to be seen how their artificial brains will help future robots relate to the world around them.

Source: siliconangle.com