People have trouble recognizing real faces

Text, audio, images, and video generated by artificial intelligence (AI) are often used for nonconsensual sexual imagery (including “revenge porn”), financial fraud, and disinformation campaigns such as political propaganda.

AI-generated content can entertain but also deceive: it can synthesize speech in anyone’s voice, generate an image of a fictional person, swap one person’s identity for another’s, or alter what someone says in a video.

Dr. Sophie Nightingale of Lancaster University and Professor Hany Farid of the University of California, Berkeley, ran experiments in which participants were asked to distinguish faces synthesized by StyleGAN2 from real faces and to rate how trustworthy the faces appeared.

The results showed that synthetically generated faces are not only photorealistic but also nearly indistinguishable from real faces and are even considered more trustworthy.

“Our evaluation of the photorealism of AI-synthesized faces indicates that synthesis engines have passed through the uncanny valley and are capable of creating faces that are indistinguishable—and more trustworthy—than real faces”.

Generative adversarial networks (GANs) pit two neural networks against each other: a generator and a discriminator. The generator starts with a random array of pixels and iteratively learns to synthesize a realistic face, producing an image of a fictional person. With each iteration, the discriminator learns to tell the synthesized face apart from a corpus of genuine faces; if the synthesized face can be distinguished from the real ones, the generator is penalized.
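To make the "penalty" concrete, here is a minimal sketch of the standard GAN cross-entropy losses. It is an illustration only, not necessarily the exact objective used to train StyleGAN2, and the function names (`bce`, `discriminator_loss`, `generator_loss`) and the example scores are hypothetical. The discriminator's output is assumed to be a probability between 0 and 1 that an image is real.

```python
import math


def bce(prediction: float, target: float) -> float:
    """Binary cross-entropy for a single probability in (0, 1)."""
    eps = 1e-12  # clamp to avoid log(0)
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Low when the discriminator scores real faces near 1 and fakes near 0."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake: float) -> float:
    """High when the discriminator spots the fake (score near 0),
    low when the fake is scored as real (score near 1)."""
    return bce(d_fake, 1.0)


# Hypothetical discriminator scores for two synthesized faces.
print("easily spotted fake:", generator_loss(0.05))
print("convincing fake:    ", generator_loss(0.90))
```

In a real system both networks would be updated by gradient descent on these losses, so each one improves in response to the other.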

The researchers warn that people’s inability to recognize AI-generated visuals may have serious consequences.

“Perhaps most pernicious is the consequence that, in a digital world in which any image or video can be faked, the authenticity of any inconvenient or unwelcome recording can be called into question”.

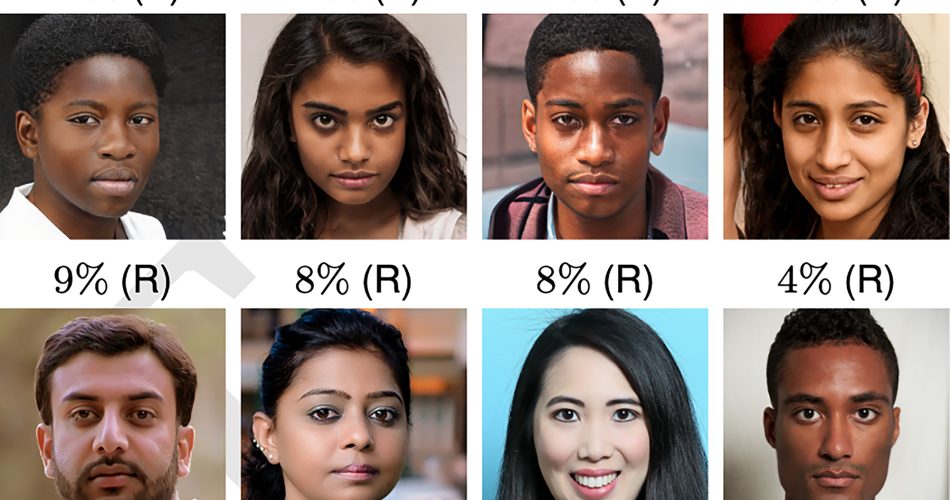

- In the first experiment, 315 participants were each asked to classify 128 faces, drawn from a set of 800, as either real or synthetic. Their average accuracy was 48%, close to the 50% expected from guessing.

- In a second experiment, 219 new participants were given training and feedback on how to classify faces. They classified 128 faces from the same set of 800, but accuracy improved only to 59% despite the training.

The researchers then asked whether people’s perceptions of trustworthiness could help them spot synthetic faces.

“Faces provide a rich source of information, with exposure of just milliseconds sufficient to make implicit inferences about individual traits such as trustworthiness. We wondered if synthetic faces activate the same judgments of trustworthiness. If not, then a perception of trustworthiness could help distinguish real from synthetic faces”.

In a third experiment, 223 participants rated the trustworthiness of 128 faces drawn from the same set of 800 on a scale of 1 (very untrustworthy) to 7 (very trustworthy).

The average trustworthiness rating of synthetic faces was 7.7% higher than that of real faces, a statistically significant difference.

“Perhaps most interestingly, we find that synthetically-generated faces are more trustworthy than real faces”.

In addition, African American faces were rated as more trustworthy than South Asian faces, with no other differences across race. Women’s faces were rated as far more trustworthy than men’s.

“A smiling face is more likely to be rated as trustworthy, but 65.5% of real faces and 58.8% of synthetic faces are smiling, so facial expression alone cannot explain why synthetic faces are rated as more trustworthy”.

According to the researchers, synthesized faces may be perceived as more trustworthy because they resemble average faces, which tend to be regarded as more trustworthy.

To protect the public from “deep fakes”, the researchers also proposed guidelines for the creation and distribution of synthetic images.

Safeguards could include embedding strong watermarks into image and video synthesis networks, which would provide a downstream mechanism for reliable identification. They also suggest rethinking the laissez-faire practice of publicly and freely releasing code for anyone to incorporate into any application, because they consider the democratization of access to this powerful technology to be the most significant threat.
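The source does not say which watermarking scheme the researchers have in mind. As a toy illustration of the general idea of a hidden, machine-readable mark, the sketch below writes bits into the least significant bit of pixel values. This technique is fragile (compression or resizing destroys it), so it is not the "strong" watermark the researchers call for; the function names and pixel values are hypothetical.

```python
def embed_bits(pixels: list[int], bits: list[int]) -> list[int]:
    """Return a copy of `pixels` with one watermark bit stored in the
    least significant bit of each of the first len(bits) pixels."""
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the image")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract_bits(pixels: list[int], count: int) -> list[int]:
    """Read back the lowest bit of the first `count` pixels."""
    return [p & 1 for p in pixels[:count]]


# Hypothetical 8-bit grayscale pixel values and a 4-bit mark.
image = [200, 13, 77, 254]
mark = [1, 0, 1, 1]

marked_image = embed_bits(image, mark)
print(marked_image)
print(extract_bits(marked_image, len(mark)))
```

Each pixel changes by at most one intensity level, so the mark is invisible to the eye but trivially recoverable by software that knows where to look.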

“At this pivotal moment, and as other scientific and engineering fields have done, we encourage the graphics and vision community to develop guidelines for the creation and distribution of synthetic-media technologies that incorporate ethical guidelines for researchers, publishers, and media distributors”.

As we have discussed previously, the risks of deepfakes are part of the so-called “fake society”, in which we can’t distinguish what’s real from what’s fake because AI can manipulate almost anything. However, I don’t think restricting access to this technology is the way to reduce the risks, because it could concentrate the technology in the hands of a few, which would be more dangerous. I think this will become a battle of AIs against AIs, in which some systems help us counter the danger posed by others. Still, it is frightening how easily an AI can deceive our innate perception. It means we could be cheated without ever being aware of it.

Source: neurosciencenews.com