The quest for trustworthy Artificial General Intelligence

Rumors surrounding OpenAI’s Q* model have reignited public interest in the potential benefits and drawbacks of artificial general intelligence (AGI).

AGI refers to AI that could be taught and trained to perform cognitive tasks at a human level. Rapid progress in AI, especially in deep learning, has raised both hope and fear about that possibility. Several companies, including OpenAI and Elon Musk’s xAI, are working toward it. This raises the question: are we actually moving toward AGI? Maybe not.

Deep learning limits

ChatGPT and most modern AI systems rely on deep learning, a machine learning (ML) technique based on artificial neural networks. Its versatility in handling various data types and its minimal need for pre-processing, among other advantages, have contributed to its growing popularity. Many think deep learning will keep developing and be essential to reaching AGI.

Deep learning does have some drawbacks, though. Building models that reflect their training data requires large datasets and costly computing resources. These models derive statistical rules that replicate phenomena observed in reality. To produce responses, those rules are then applied to new real-world data.

Deep learning techniques therefore follow a prediction-focused logic, updating their rules in response to newly observed events. Because these rules are so sensitive to the unpredictability of the natural world, they are less suited to achieving AGI. The June 2022 accident involving a Cruise robotaxi may have occurred because the vehicle had not been trained for the scenario it encountered, which prevented it from making confident decisions.

The ‘what if’ conundrum

Humans, the model for AGI, do not develop exhaustive rules for events that occur in the real world. To interact with the world, humans usually perceive it in real time, using preexisting representations to understand the circumstances, the background, and any incidental elements that can affect choices. Instead of creating new rules for every new phenomenon, we adapt and rework the rules that already exist to enable efficient decision-making.

Suppose you encounter a cylindrical object on the ground while hiking a forest trail. To decide what to do next using deep learning, you would need to collect data about the object’s various features, classify it as either non-threatening (like a rope) or potentially dangerous (like a snake), and then act accordingly.

A human, on the other hand, would probably start by evaluating the object from a distance, updating that information continuously, and choosing a sound course of action from a “distribution” of choices that worked well in comparable past circumstances. The distinction is subtle but important: this approach focuses on defining alternative actions with respect to desired outcomes rather than on predicting the future.

When prediction is not possible, achieving AGI may require moving away from predictive deductions and toward improving an inductive “what if...?” capacity.

Decision-making under deep uncertainty

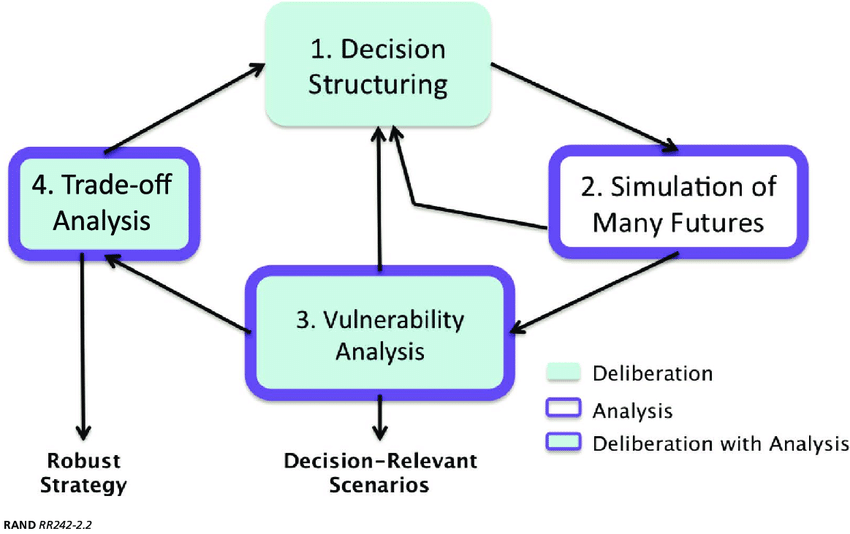

Decision-making under deep uncertainty (DMDU) techniques, such as Robust Decision Making, may offer a way for AGI to reason over choices. Without requiring ongoing retraining on new data, DMDU techniques examine how vulnerable alternative options are across a range of future circumstances. They assess decisions by identifying the critical factors shared by the cases that fall short of predefined outcome criteria.

The goal is to identify decisions that demonstrate robustness, meaning the ability to perform well across diverse futures. While many deep learning approaches prioritize optimal solutions that might fail in unexpected circumstances, DMDU methods value robust alternatives that may trade some optimality for satisfactory results across a variety of environments. DMDU approaches thus provide a useful conceptual foundation for creating AI that can handle real-world uncertainty.

Developing a fully autonomous vehicle (AV) illustrates how the proposed approach could be applied. Simulating human decision-making while driving is difficult because real-world conditions are diverse and unpredictable. Automotive companies have invested heavily in deep learning models for full autonomy, yet these models frequently falter in unpredictable circumstances. Because it is impractical to model every scenario and prepare for every failure, AV development is continually addressing unexpected problems.

Robust Decision Making (RDM) key points:

- Multiple possible future scenarios representing a wide range of uncertainties are defined.

- For each scenario, potential decision options are evaluated by simulating their outcomes.

- The options are compared to identify those that are “robust,” giving satisfactory results across most scenarios.

- The most robust options, which perform well across a variety of uncertain futures, are selected.

- The goal is not to find the optimal option for one specific scenario, but the one that works well overall.

- The emphasis is on flexibility in changing environments, not predictive accuracy.
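
The key points above can be sketched in a few lines of Python. This is only a toy illustration, not an implementation of any specific RDM toolkit: the uncertain factors (road friction and distance to an obstacle), the candidate speeds, the idealized stopping-distance formula, and every function name are assumptions introduced here for the example.

```python
from itertools import product

G = 9.81  # gravitational acceleration in m/s^2

# Hypothetical uncertain factors; every combination is one plausible future.
FRICTION = [0.3, 0.6, 0.9]    # icy, wet, and dry road surfaces
GAP_M = [20.0, 40.0, 60.0]    # distance to a sudden obstacle, in meters
SCENARIOS = list(product(FRICTION, GAP_M))

# Hypothetical decision options: candidate cruising speeds in m/s.
OPTIONS = [10.0, 15.0, 20.0]


def stopping_distance(speed, friction):
    """Idealized braking distance on a flat road: v^2 / (2 * mu * g)."""
    return speed ** 2 / (2 * friction * G)


def is_satisfactory(speed, scenario):
    """An outcome is satisfactory if the vehicle stops before the obstacle."""
    friction, gap = scenario
    return stopping_distance(speed, friction) <= gap


def robustness(speed):
    """Share of scenarios in which this option gives a satisfactory outcome."""
    passed = sum(is_satisfactory(speed, s) for s in SCENARIOS)
    return passed / len(SCENARIOS)


def most_robust(options):
    """Option that is satisfactory in the most scenarios (ties go to the faster speed)."""
    return max(options, key=lambda v: (robustness(v), v))


def best_for(scenario, options):
    """Fastest option that is still satisfactory in one specific scenario."""
    safe = [v for v in options if is_satisfactory(v, scenario)]
    return max(safe) if safe else None


if __name__ == "__main__":
    for v in OPTIONS:
        print(f"{v:>4} m/s: satisfactory in {robustness(v):.0%} of scenarios")
    print("most robust:", most_robust(OPTIONS))
    print("best for dry road, long gap:", best_for((0.9, 60.0), OPTIONS))
```

In this toy setup the fastest speed is the best choice for the single most favorable scenario, while the slowest speed is the only one that stays satisfactory in every scenario. That gap between the two answers is the trade-off between optimality and robustness that the list describes.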

Robust decisioning

One possible remedy is a robust decision-making approach. The AV’s sensors would collect data in real time to determine whether a particular traffic situation calls for braking, changing lanes, or accelerating.

If critical factors cast doubt on the rote algorithmic response, the system would then assess the vulnerability of alternative decisions in the given context. This would help the vehicle adapt to real-world uncertainty and reduce the pressing need for retraining on large datasets. Such a paradigm shift could improve AV performance by moving the emphasis from making perfect forecasts to assessing the limited set of decisions an AV must make in order to function.
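
One way to picture this fallback is the minimal Python sketch below. It is not how any production AV works: the `Situation` fields, the safety thresholds, the confidence score attached to the rote response, and the way sensor readings are perturbed are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, replace
from itertools import product

ACTIONS = ("brake", "change_lane", "accelerate")


@dataclass(frozen=True)
class Situation:
    gap_m: float       # sensed distance to the obstacle ahead, in meters
    lane_clear: bool   # whether the adjacent lane is sensed as free
    road_wet: bool     # whether the road is sensed as wet


def is_safe(action, s):
    """Toy outcome model with made-up thresholds (an assumption, not real AV logic)."""
    if action == "brake":
        return s.gap_m >= (35.0 if s.road_wet else 20.0)
    if action == "change_lane":
        return s.lane_clear
    return s.gap_m >= 60.0  # accelerate only with plenty of room ahead


def plausible_variants(s):
    """Perturb the uncertain readings: gap off by 25% either way, road dry or wet."""
    return [
        replace(s, gap_m=s.gap_m * scale, road_wet=wet)
        for scale, wet in product((0.75, 1.0, 1.25), (False, True))
    ]


def vulnerability(action, s):
    """Number of plausible variants in which the action would be unsafe."""
    return sum(not is_safe(action, v) for v in plausible_variants(s))


def decide(s, rote_action, confidence, threshold=0.8):
    """Keep the rote response when it is trusted; otherwise pick the least vulnerable action."""
    if confidence >= threshold:
        return rote_action
    return min(ACTIONS, key=lambda a: vulnerability(a, s))


if __name__ == "__main__":
    s = Situation(gap_m=30.0, lane_clear=True, road_wet=False)
    print(decide(s, "brake", confidence=0.95))  # rote response is trusted
    print(decide(s, "brake", confidence=0.55))  # doubt triggers the vulnerability check
```

With a trusted rote response the sketch simply returns it. When confidence drops below the threshold, braking turns out to be unsafe in the wet-road variants with a shorter gap, so the lane change, which is safe in every variant here, is selected instead. No retraining is involved; only a small set of candidate actions is re-examined.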

As AI develops, reaching AGI may require shifting away from the deep learning paradigm and placing more emphasis on decision context. Despite its success in many applications, deep learning has limitations as a path to AGI.

DMDU methods may offer an initial framework for shifting the current AI paradigm toward reliable, decision-driven techniques that can deal with real-world uncertainty.

The quest for artificial general intelligence continues to fascinate and challenge the AI community. While deep learning has achieved remarkable successes on narrow tasks, its limitations become apparent when considering the flexible cognition required for AGI. Humans navigate the real world by quickly adapting existing mental models to new situations, rather than relying on exhaustive predictive rules.

Techniques like Robust Decision Making (RDM), which focuses on assessing the vulnerabilities of choices across plausible scenarios, may provide a promising path forward. Though deep learning will likely continue to be an important tool, achieving reliable AGI may require emphasizing inductive reasoning and decision-focused frameworks that can handle uncertainty. The years ahead will tell if AI can make the conceptual leaps needed to match general human intelligence. But by expanding the paradigm beyond deep learning, we may discern new perspectives on creating AI that is both capable and trustworthy.