But it doesn’t mean they will be less dangerous

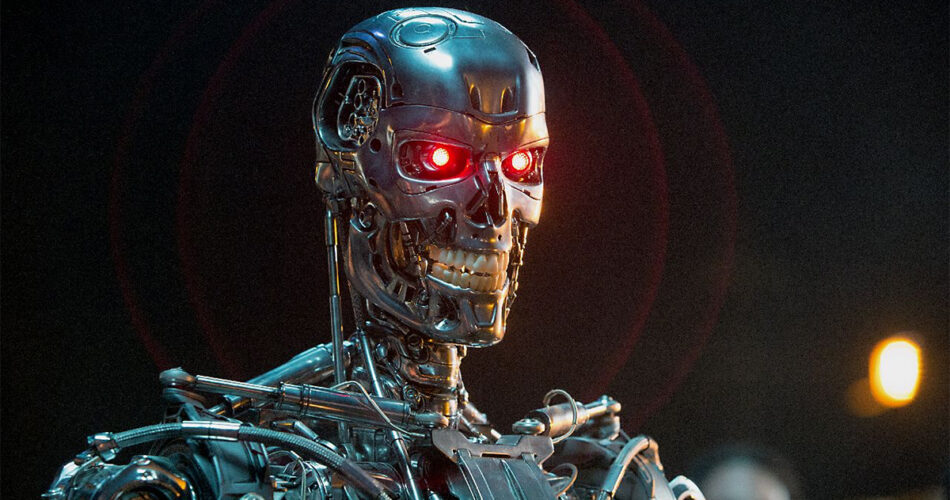

When you think about killer machines, the Terminator and HAL 9000 are the first that come to mind. But when you look at Spot by Boston Dynamics, you cannot help but think of a dystopian episode of Black Mirror, and that is still what everybody thinks of when they see the latest robots companies are producing.

However, as explained here, movies provide good prompts. Robert Wallace, the chief of the CIA’s Office of Technical Service in the US, has recalled how Russian spies would study the most recent Bond film to see what technologies might be on the horizon for them, even though these technologies are less cool than you might think.

Nonetheless, killer robots are far from being sentient or having evil intent. Robots may never be sentient, despite current fears to the contrary. The technology we should be concerned about is far simpler.

The TV news shows us how autonomous drones, tanks, ships, and submarines are changing modern warfare, but these robots aren’t much more advanced than the ones you can buy yourself in a shop. And increasingly, their algorithms are being given the authority to decide which targets to locate, follow, and destroy.

This is putting the world in danger and raising a number of ethical, legal, and technological issues. For instance, these weapons will exacerbate the already unstable geopolitical environment. Furthermore, such weapons cross a moral line and bring us to a terrifying age in which unaccountable machines decide people’s fates.

However, robot developers are beginning to fight back against this scenario. Six major robotics companies have pledged never to weaponize their robot systems. They include Boston Dynamics, which makes the abovementioned Spot as well as the Atlas humanoid robot, which is capable of incredible backflips.

Although robotics companies have previously expressed their concerns about this unsettling future, third-party guns have already been seen mounted on clones of Boston Dynamics’ Spot robot, for example. So although some companies refuse to let their robots be used for warfare, others may not do the same.

A first step to protect ourselves from this horrific future is for nations to act as a group, just as they did with chemical, biological, and even nuclear weapons. Such regulation won’t be perfect, but it will stop arms companies from openly marketing these weapons, thereby limiting their spread.

That is why the UN Human Rights Council’s recent unanimous decision to examine the human rights implications of new and developing technologies like autonomous weaponry is even more significant than a pledge from robotics companies.

A number of governments have previously asked the UN to regulate potential killer robots. Numerous groups and individuals, including the African Union, the European Parliament, the UN Secretary-General, Nobel Peace laureates, politicians, religious figures, and thousands of AI and robotics experts, have called for regulation. Australia, however, has not yet lent its support to these calls.

Being aware of the risks already is a good thing, even though, as Murphy’s law says, “anything that can go wrong will go wrong”. If the technology can do harm, somebody will inevitably use it for that. We can only try to mitigate the risks; we cannot get rid of them. AI and robots can be very helpful technologies as well as very dangerous weapons. That’s why we need regulation, but also technology able to counter the dangers and protect us. And above all, we should be more aware of how we use technology and of its consequences.