A new architecture for a new tech era

The evolution of CPUs has given us enormous computing power, but today it’s time to take a step forward with a new kind of architecture: neuromorphic chips.

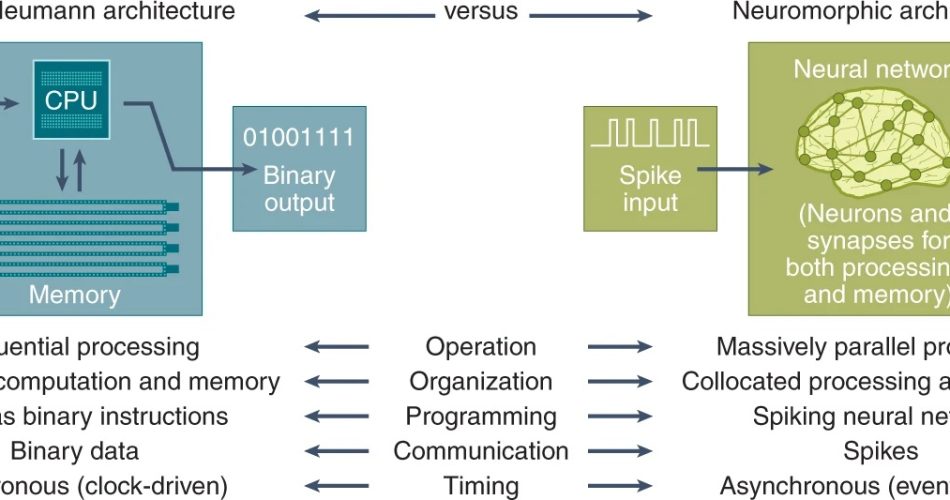

Neuromorphic chips are designed to mimic the way neurons work in several respects, for instance by performing many operations in parallel. Moreover, just as biological neurons both compute and store data, neuromorphic hardware often aims to unify processing and memory, unlike the von Neumann architecture used in today’s processors, which keeps them separate. This can save the energy and time otherwise spent moving data between the two. Finally, whereas traditional microchips use a clock signal emitted at regular intervals to coordinate their circuits, activity in a neuromorphic chip is often "spiking": a unit fires only when its accumulated electrical charge reaches a specific threshold, much as neurons do in our brains.
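The threshold-triggered behavior described above is often modeled as a leaky integrate-and-fire (LIF) neuron. The sketch below is a simplified, discrete-time illustration, not the circuit of any particular chip; the function name `simulate_lif` and its parameter values are assumptions chosen for readability.

```python
# A minimal leaky integrate-and-fire (LIF) neuron, simulated in discrete time.
# Parameter values are illustrative assumptions, not taken from any specific chip.

def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Return the time steps at which the neuron spikes.

    input_current: sequence of input values, one per time step.
    threshold:     membrane potential at which the neuron fires.
    leak:          fraction of the potential retained each step (0 < leak < 1).
    reset:         potential the neuron returns to after a spike.
    """
    potential = reset
    spike_times = []
    for t, current in enumerate(input_current):
        # Charge leaks away a little each step, then the new input is added.
        potential = leak * potential + current
        if potential >= threshold:
            # An event is emitted only when the threshold is crossed.
            spike_times.append(t)
            potential = reset
    return spike_times


if __name__ == "__main__":
    # No input: the neuron stays silent and produces no events at all.
    print(simulate_lif([0.0] * 10))  # → []
    # Constant weak input: charge accumulates until it crosses the threshold.
    print(simulate_lif([0.3] * 10))  # → [3, 7]
```

With no input the neuron emits nothing, which hints at why event-driven hardware can save energy: idle units do no work, whereas a clocked circuit ticks regardless.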

Some researchers go further and combine neuromorphic circuits with analog computing, which, like real neurons, processes continuous signals, to make the chips even more brain-like. The resulting chips differ substantially from today’s purely digital architectures, which process binary signals made of 0s and 1s.

Neuromorphic chips could reduce the energy consumption of data-intensive workloads such as A.I. Unfortunately, A.I. algorithms have not worked well on the analog versions of these chips because of a problem known as device mismatch: manufacturing variation leaves the microscopic components inside the chip’s analog neurons mismatched in size, so no two neurons behave exactly alike. Individual chips are not sophisticated enough to run state-of-the-art training procedures, so the algorithms must first be trained digitally on conventional computers, and once transferred to an analog chip they are undermined by that chip’s particular mismatch.
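The effect of device mismatch can be illustrated with a toy model. This is a simplified sketch, not a model of any real chip: it assumes each analog neuron computes a weighted sum of its inputs and then applies its own fixed gain and offset error. The helpers `make_chip` and `run_layer`, and the spread values, are hypothetical choices for illustration.

```python
import random

# Toy illustration of device mismatch. Hypothetical model: every analog neuron
# computes a weighted sum of its inputs, then applies its own fixed gain and
# offset error, drawn once per chip as if set by manufacturing variation.

def make_chip(n_neurons, gain_spread=0.2, offset_spread=0.1, seed=0):
    """Return per-neuron (gains, offsets) for one simulated chip."""
    rng = random.Random(seed)
    gains = [1.0 + rng.uniform(-gain_spread, gain_spread) for _ in range(n_neurons)]
    offsets = [rng.uniform(-offset_spread, offset_spread) for _ in range(n_neurons)]
    return gains, offsets

def run_layer(weights, inputs, gains, offsets):
    """Each neuron outputs gain * (weighted sum of inputs) + offset."""
    outputs = []
    for row, gain, offset in zip(weights, gains, offsets):
        total = sum(w * x for w, x in zip(row, inputs))
        outputs.append(gain * total + offset)
    return outputs

# Weights "trained digitally" (here simply chosen) for an ideal, mismatch-free device.
weights = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
x = [1.0, 0.5, -1.0]

ideal = run_layer(weights, x, gains=[1.0, 1.0], offsets=[0.0, 0.0])

if __name__ == "__main__":
    print("ideal device:", [round(v, 3) for v in ideal])  # → [0.3, 1.2]
    # The same weights copied onto two different chips give two different answers.
    for seed in (1, 2):
        gains, offsets = make_chip(len(weights), seed=seed)
        chip = run_layer(weights, x, gains, offsets)
        print(f"chip {seed}:", [round(v, 3) for v in chip])
```

Because every chip draws its own errors, there is no single set of digitally trained weights that is correct for all of them.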

A paper published in the Proceedings of the National Academy of Sciences now describes a solution. A team led by Friedemann Zenke of the Friedrich Miescher Institute for Biomedical Research and Johannes Schemmel of Heidelberg University showed that a spiking neural network, a type of A.I. algorithm that communicates using the brain’s distinctive signal, the spike, could learn to compensate for device mismatch and work with the chip.
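The general idea of learning around a chip’s imperfections can be sketched by training with the device "in the loop", so that the error is measured on the hardware itself. This is not the team’s method: they trained spiking networks on analog hardware, while this toy uses a single linear neuron whose hidden `GAIN` and `OFFSET` values are arbitrary stand-ins for mismatch. All names here are hypothetical.

```python
# Toy "chip-in-the-loop" training. Assumptions (all hypothetical, for illustration):
# - the chip is a single analog neuron computing GAIN * (w . x) + OFFSET,
# - GAIN and OFFSET are unknown to the trainer (they stand in for device mismatch),
# - the trainer uses a gradient computed as if the neuron were ideal,
#   but with the error measured on the (simulated) device.

GAIN, OFFSET = 1.15, 0.07  # hidden per-chip mismatch (arbitrary values)

def chip_output(w, x):
    return GAIN * sum(wi * xi for wi, xi in zip(w, x)) + OFFSET

# Task: reproduce target = 0.8 * x1 - 0.3. The constant 1.0 input acts as a bias.
data = [([x1, 1.0], 0.8 * x1 - 0.3) for x1 in (-1.0, -0.5, 0.0, 0.5, 1.0)]

def max_error(w):
    return max(abs(chip_output(w, x) - t) for x, t in data)

# 1) Weights that are exactly right for an ideal neuron, copied onto the chip.
transferred = [0.8, -0.3]

# 2) Weights learned with the chip in the loop.
def train_in_loop(epochs=200, lr=0.1):
    w = [0.0, 0.0]
    for _ in range(epochs):
        for x, target in data:
            err = chip_output(w, x) - target  # error measured on the "device"
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w

in_loop = train_in_loop()

if __name__ == "__main__":
    print(f"transferred weights, max error: {max_error(transferred):.3f}")
    print(f"in-the-loop weights, max error: {max_error(in_loop):.2e}")
```

The transferred weights miss the target by up to about 0.145, while the in-the-loop weights converge to essentially zero error: the learned weights absorb this particular chip’s gain and offset without ever knowing them.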

This matters because these chips need far less power to run A.I. algorithms. IBM’s TrueNorth neuromorphic chip, for example, has five times as many transistors as a conventional Intel processor yet consumes only 70 milliwatts. A typical Intel processor draws 35 to 140 watts, or 500 to 2,000 times more.
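The ratio follows directly from the quoted figures; the short check below only restates the numbers in the paragraph above, converted to watts.

```python
# Power figures quoted in the text, in watts.
neuromorphic_w = 0.070            # IBM neuromorphic chip: 70 milliwatts
cpu_low_w, cpu_high_w = 35, 140   # conventional Intel processor range

ratio_low = cpu_low_w / neuromorphic_w
ratio_high = cpu_high_w / neuromorphic_w

if __name__ == "__main__":
    print(f"{ratio_low:.0f}x to {ratio_high:.0f}x more power")  # → 500x to 2000x more power
```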

All large-scale neuromorphic computers are silicon-based and implemented in conventional complementary metal-oxide-semiconductor (CMOS) technology. However, the neuromorphic community is investing heavily in new materials for neuromorphic implementations, such as phase-change, ferroelectric, and non-filamentary devices, topological insulators, and channel-doped biomembranes. A prominent strategy in the literature is to use memristors as the fundamental building block, because their resistive memory allows processing and memory to be colocated, but other kinds of devices, such as optoelectronic devices, have also been used to build neuromorphic computers. Each device and material has its own operating characteristics: how fast it runs, how much energy it consumes, and how closely it resembles biology. This variety gives designers the opportunity to tailor the hardware’s properties to a given application.
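One common way memristors colocate memory and processing is a crossbar array: each device’s conductance stores a weight, and applying voltages to the rows yields column currents equal to a matrix-vector product, by Ohm’s law (I = V · G) and Kirchhoff’s current law. The sketch below assumes ideal devices with no noise, nonlinearity, or wire resistance; `crossbar_currents` is a hypothetical helper, and real arrays deviate from this in all of those respects.

```python
# Idealized memristor crossbar computing a matrix-vector product in place.
# Assumptions: ideal devices, no wire resistance, no noise; values are arbitrary.

def crossbar_currents(voltages, conductances):
    """Column currents of an ideal crossbar.

    voltages:     input voltage applied to each row (volts).
    conductances: conductances[i][j] is the programmed conductance (siemens)
                  of the memristor joining row i to column j; this is the
                  stored "weight", and it never leaves the array.
    Returns the current collected on each column (amperes).
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for v, row in zip(voltages, conductances):
        for j, g in enumerate(row):
            # Ohm's law gives each device's current (I = V * G);
            # Kirchhoff's current law sums them along the shared column wire.
            currents[j] += v * g
    return currents


if __name__ == "__main__":
    # Two rows, two columns: the result is the vector-matrix product V^T G.
    print(crossbar_currents([0.2, 0.1], [[1e-3, 2e-3], [3e-3, 0.5e-3]]))
```

In a digital von Neumann machine, the same product requires fetching every weight from memory; in the crossbar, the weights stay where they are and the physics performs the multiply-accumulate.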

Neuromorphic chips are not new: the concept dates back to the 1980s. Back then, however, the designs required specific algorithms to be built into the semiconductor itself, so one chip would be used to detect motion and another to detect sound. None of them worked like our own cortex, as a general-purpose processor.

This was partly because programmers had no way to design algorithms that could do much with a general-purpose chip. Even as more brain-like circuits were being built, designing algorithms for them remained a challenge.

Nengo, a compiler that lets developers build their own algorithms for A.I. applications that run on general-purpose neuromorphic hardware, lies at the heart of efforts to change this. Nengo’s usefulness stems from its use of Python, a well-known programming language noted for its clear syntax, and from its ability to run algorithms on a variety of hardware platforms, including neuromorphic circuits. Anyone who knows Python will soon be able to build powerful neural networks for neuromorphic technology.

Perhaps the most impressive system built with the compiler is Spaun, a project that in 2012 earned international praise as the most complex brain model ever simulated on a computer. Spaun showed how a computer can interact with its surroundings and perform human-like cognitive tasks, such as recognizing images and controlling a robot arm that writes down what it sees. The system wasn’t perfect, but it was a striking demonstration of how computers may narrow the gap between human and machine cognition in the future. Most of Spaun has recently been running 9,000 times faster on neuromorphic hardware, using less energy than it would on conventional CPUs, and by the end of 2017 all of Spaun is expected to run on neuromorphic hardware.

With the emergence of neuromorphic hardware and tools like Nengo, we may soon have A.I. capable of a breathtaking degree of natural intelligence right on our phones.

This hardware revolution, combined with the software revolution in A.I. algorithms, will have consequences that are hard to imagine, both positive and negative.

Source: wired.co.uk