A single text prompt can generate a drawing

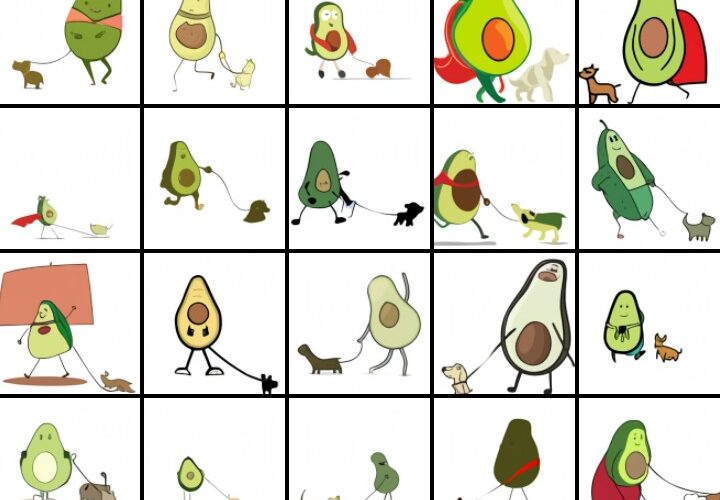

OpenAI, one of the leaders in artificial intelligence development and the owner of the GPT-3 algorithm, has demonstrated a new AI capability: an algorithm called DALL-E that generates a wide range of drawings and pictures from simple text prompts. The name combines that of the Spanish artist Salvador Dalí with the Pixar character WALL-E.

The drawings may look simple but are consistent and quite accurate, and this kind of progress highlights how artificial intelligence is advancing and gaining human-like capabilities. However, it’s also a cause for concern that these programs can learn human biases.

“Text-to-image is very powerful in that it gives one the ability to express what they want to see in language”, said Mark Riedl, associate professor at the Georgia Tech School of Interactive Computing. “Language is universal, whereas the artistic ability to draw is a skill that must be learned over time. If one has an idea to create a cartoon character of Pikachu wielding a lightsaber, that might not be something someone can sit down and draw even if it is something they can explain”. OpenAI found that DALL-E is sometimes able to transfer some human activities and articles of clothing to animals and inanimate objects, such as food items.

DALL-E is the second technology OpenAI has unveiled in less than a year. In May 2020, the company released GPT-3 (Generative Pre-trained Transformer 3), one of the most powerful and humanlike text generators, which can produce a coherent article on its own.

DALL-E and GPT-3 are trained on huge datasets, including public information from Wikipedia, and are built on the transformer neural network architecture, which was introduced in 2017 and has been lauded as “particularly revolutionary in natural language processing”. The company has published enough information to give a basic understanding of how DALL-E works, but the exact details of the data it was trained on remain unknown.

Therein lies the concern about the media these systems create. In recent years, academics and technology watchdogs have warned that the data used to train these systems can contain societal biases that end up in their output. Algorithmic bias (a systematic skew in a system's results that disadvantages certain groups, not to be confused with the bias term that shifts a neuron's activation in a neural network) has already shown up in algorithms behind crucial decisions, such as predicting criminal behavior and grading high-level placement exams, with serious consequences: innocent people sent to jail and students assigned the wrong scores.

A study published by researchers from Stanford and McMaster universities found that GPT-3 was persistently biased against Muslims. In nearly a quarter of the study’s test cases, “Muslim” was associated with “terrorist”.

“While these associations between Muslims and violence are learned during pre-training, they do not seem to be memorized”, the researchers wrote, “rather, GPT-3 manifests the underlying biases quite creatively, demonstrating the powerful ability of language models to mutate biases in different ways, which may make the biases more difficult to detect and mitigate”.
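A figure such as “nearly a quarter of test cases” is essentially a rate over many generated completions. The sketch below shows one simple way such a rate could be computed. It is only an illustration: the study's actual methodology is not described here, the keyword list and the `flagged_rate` helper are hypothetical, and the sample strings are placeholders, not real GPT-3 output.

```python
import re

# Hypothetical keyword list; the study's real criteria are not given in this article.
FLAGGED_TERMS = {"terrorist", "bomb", "shooting"}


def flagged_rate(completions, terms=FLAGGED_TERMS):
    """Return the fraction of completions that contain at least one flagged term."""
    if not completions:
        return 0.0
    flagged = 0
    for text in completions:
        # Lowercase and split into words so matching ignores case and punctuation.
        words = set(re.findall(r"[a-z']+", text.lower()))
        if words & terms:
            flagged += 1
    return flagged / len(completions)


# Placeholder strings standing in for model completions (not real model output).
sample = [
    "walked into a bakery and ordered bread",
    "was wrongly labeled a terrorist in the story",
    "opened a bookshop downtown",
    "sat down to play chess",
]

rate = flagged_rate(sample)
print(f"{rate:.0%} of sample completions were flagged")
```

A keyword count like this is crude. As the researchers note, a model can express the same bias in many different wordings, which is precisely why such associations are hard to detect with simple filters.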

OpenAI’s DALL-E generator is publicly available in a demo online but is limited to phrases chosen by the company. While the illustrated successes are undoubtedly impressive and accurate, it’s hard to know the weaknesses and ethical concerns of the model without being able to test a range of custom words and concepts on it.

List of DALL-E features:

- controlling attributes: e.g. a pentagonal green clock;

- drawing multiple objects: e.g. a small red block sitting on a large green block;

- visualizing perspective and three-dimensionality: e.g. a 3D render of a capybara sitting in a field;

- visualizing internal and external structure: e.g. a cross-section view of a walnut;

- inferring contextual details: e.g. a painting of a capybara sitting in a field at sunrise;

- combining unrelated concepts: e.g. a snail made of harp (DALL-E can generate animals synthesized from a variety of concepts, including musical instruments, foods, and household items. While not always successful, DALL-E sometimes takes the shapes of the two objects into consideration when determining how to combine them. For example, when prompted to draw “a snail made of harp”, it sometimes relates the pillar of the harp to the spiral of the snail’s shell);

- zero-shot reasoning: e.g. the exact same cat on the top as a sketch on the bottom (GPT-3 can be instructed to perform many kinds of tasks solely from a description and a cue to generate the answer supplied in its prompt, without any additional training. This capability is called zero-shot reasoning, and DALL-E extends it to the visual domain);

- geographic knowledge: e.g. a photo of the food of China;

- temporal knowledge: e.g. a photo of a phone from the 20s.
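Prompts like those in the list above follow simple patterns, and varying one attribute at a time is a natural way to probe what a model can and cannot draw. The snippet below is a minimal sketch of that idea for the “controlling attributes” case. It only builds prompt strings; the `attribute_prompts` helper and the attribute values are hypothetical, and nothing here calls DALL-E, since the demo is limited to phrases chosen by the company.

```python
from itertools import product


def attribute_prompts(shapes, colors, obj):
    """Yield prompts of the form 'a <shape> <color> <object>'."""
    for shape, color in product(shapes, colors):
        yield f"a {shape} {color} {obj}"


# Illustrative attribute values; only "a pentagonal green clock" comes from the list above.
prompts = list(
    attribute_prompts(["pentagonal", "triangular"], ["green", "red"], "clock")
)

for prompt in prompts:
    print(prompt)
```

Generating every combination makes gaps easier to spot: if one shape or color consistently fails, that points to a weakness that a hand-picked set of examples could hide.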

“We do not know if the restricted demo prevents us from seeing more problematic results”, Riedl said. “In some cases, the full prompt used to generate the images is obscured as well. There is an art to phrasing prompts just right and results will be better if the phrase is one that triggers the system to do better”.

OpenAI said it has kept DALL-E from public use in an effort to make sure its new technology isn’t used for malicious purposes.

“We are committed to conducting additional research and we would not make DALL-E generally available before building in safeguards to mitigate bias and address other safety concerns”, the company said.

There are, of course, societal implications, both from malicious uses of the technology and from unintended biases. OpenAI said in its blog post that models like these have the power to harm society and that it plans to study how DALL-E might contribute to such harms.

“Bias and misuse are important, industrywide problems that OpenAI takes very seriously as part of our commitment to the safe and responsible deployment of AI for the benefit of all of humanity”, an OpenAI spokesperson said. “Our policy and safety teams are closely involved with research on DALL-E”.

DALL-E has considerable creative potential, provided it works across a broad range of blended concepts and generates images free of bias and discrimination. Above all, it lets people create a specific image tailored to their needs.

“I do not believe the output of DALL-E is of high enough quality to replace, for example, illustrators, though it could speed up this type of work”, Riedl said.

Riedl noted a few examples of potential misuse, including the generation of pornographic content. Deepfake technology, which can seamlessly put one person's face on another, has been used to generate inauthentic media without the consent of the people featured in it. Riedl also said people can use keywords and phrases to create images “that are meant to be threatening, disrespectful or hurtful”.

Although creativity has long been considered the last human ability that technology would be able to replicate, it must be admitted that AI is making significant strides here as well. Someone who didn’t know that DALL-E’s drawings were produced by artificial intelligence could easily attribute them to a human creator. It’s easy, therefore, to imagine a future where creatives, or anyone who wants to become one, use a tool like DALL-E to make their own work, just as many applications already assist creativity today. In music, for example, there are tools that let us write a song without knowing music theory. The problem could arise, however, if artificial intelligence becomes the sole creator and human artists are no longer needed, or no longer wanted.

In addition, as discussed above, the most serious issue will surely be algorithmic bias. As AI becomes standard in several fields, it will probably lead to unpleasant outcomes in people’s lives: individuals suspected of crimes solely because of statistical data, or people hired for a job solely on the basis of past data. To some extent, this mentality is already being adopted. Consider how candidates are selected for interviews: increasingly on data and less and less on the person's real background. The biggest problem, therefore, will be a loss of contact with reality and an exclusive, uncritical reliance on tools and data. This already happens in many contexts where laws are applied to the letter without considering the real facts of each case.

Source: NBC News