The battle against deepfakes has started

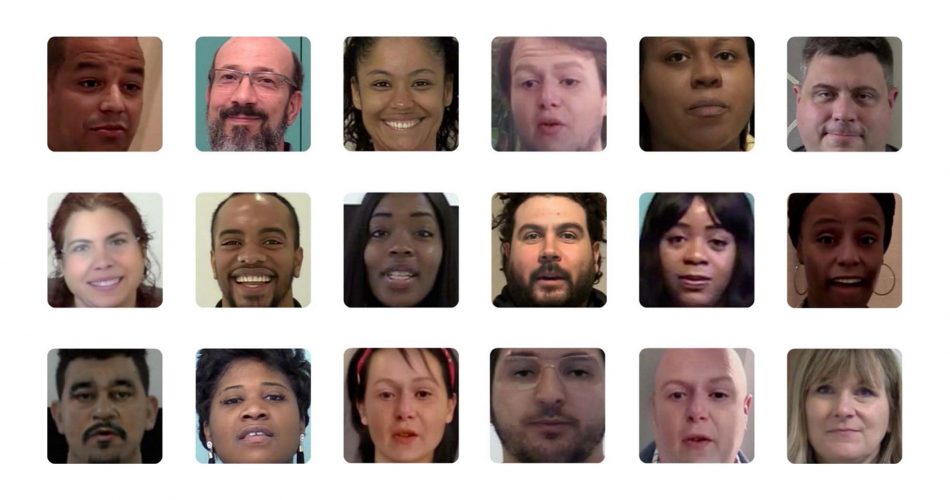

Deepfakes have grown quickly in number and spread into new fields since the first experiments, in which actors’ heads were swapped with other people’s or, worse, the technique was applied to porn movies, leading people to believe that certain celebrities had really appeared in them.

Early deepfakes were often recognizable. Now, with ever more powerful neural networks, they look more real. Although they are still not perfect, it’s easy to imagine that they will increasingly be used to create fake identities, especially in social media profiles.

Facebook knows this is going to happen, so it’s working to stem the problem and find a solution.

Their latest work is a collaboration with academics from Michigan State University (MSU). The combined team has created a method to reverse-engineer deepfakes: analyzing AI-generated imagery to reveal identifying characteristics of the machine learning model that created it.

Right now, the work is still in the research stage and isn’t ready to be deployed.

Current methods focus on telling whether an image is real or a deepfake (detection) or identifying whether an image was generated by a model seen during training or not (image attribution classification). But solving the problem of proliferating deepfakes requires taking the discussion one step further because a deepfake can be created using a generative model that is not seen in training.

Reverse engineering is a different way of approaching the problem of deepfakes, but it’s not a new concept in machine learning.

By generalizing image attribution to open-set recognition, they can infer more about the generative model used to create a deepfake than the mere fact that it has not been seen before. And by tracing similarities among the patterns in a collection of deepfakes, they could also tell whether a series of images originated from a single source.
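The idea of tracing a series of images back to a single source can be sketched in a few lines. This is only an illustration, not the researchers' method: it assumes each image has already been reduced to a short fingerprint vector, and the vectors, the `group_by_source` helper, and the 0.9 threshold are all hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two fingerprint vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def group_by_source(fingerprints, threshold=0.9):
    """Greedy grouping: an image joins the first group whose first member
    is similar enough, otherwise it starts a new group."""
    groups = []  # each group is a list of image indices
    for index, fingerprint in enumerate(fingerprints):
        for group in groups:
            representative = fingerprints[group[0]]
            if cosine_similarity(fingerprint, representative) >= threshold:
                group.append(index)
                break
        else:
            groups.append([index])
    return groups

# Hypothetical fingerprints of four images found on different platforms.
fingerprints = [
    [0.90, 0.10, 0.00],
    [0.00, 1.00, 0.10],
    [0.88, 0.12, 0.02],
    [0.05, 0.95, 0.12],
]
groups = group_by_source(fingerprints)
print(groups)
```

With these made-up vectors, images 0 and 2 land in one group and images 1 and 3 in another, which is the kind of "same model" statement described in the article. A real system would need a learned fingerprint and a carefully validated threshold.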

This work, led by MSU’s Vishal Asnani, identifies the architectural traits of unknown models. These traits, known as hyperparameters, have to be tuned in each machine-learning model. Collectively, they leave a unique fingerprint on the finished image that can then be used to identify its source.

Researchers began by running a deepfake image through a Fingerprint Estimation Network (FEN) to estimate details about the fingerprint left by the generative model. Device fingerprints are subtle but unique patterns left on each image produced by a particular device because of imperfections in the manufacturing process. In digital photography, fingerprints are used to identify the digital camera used to produce an image.
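To make the camera analogy concrete, here is a minimal sketch of the classical device-fingerprint idea: strip the smooth scene content from several photos taken with the same device, average what is left, and compare a new photo against that average. It is not the FEN, which is a neural network; the one-dimensional "photos", the two fixed patterns, and the helper names are assumptions made for illustration.

```python
import math

def residual(row):
    """Remove smooth scene content by subtracting a 3-tap moving average
    (edge pixels reuse their own value as the missing neighbour)."""
    n = len(row)
    return [
        row[i] - (row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)]) / 3
        for i in range(n)
    ]

def estimate_fingerprint(rows):
    """Average the residuals of several images from one device so the
    fixed pattern dominates over scene-dependent leftovers."""
    residuals = [residual(r) for r in rows]
    return [sum(column) / len(residuals) for column in zip(*residuals)]

def similarity(a, b):
    """Normalized correlation between two residual signals."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

WIDTH = 16
# Hypothetical fixed patterns of two different devices.
pattern_a = [1.0 if i % 2 == 0 else -1.0 for i in range(WIDTH)]
pattern_b = [1.0, 1.0, -1.0, -1.0] * (WIDTH // 4)

def shoot(pattern, slope):
    """One toy 'photo': a smooth brightness ramp plus the device's pattern."""
    return [slope * i + p for i, p in enumerate(pattern)]

fingerprint_a = estimate_fingerprint([shoot(pattern_a, k) for k in range(1, 6)])
fingerprint_b = estimate_fingerprint([shoot(pattern_b, k) for k in range(1, 6)])

# A new photo from device A, with scene content not seen before.
query = residual(shoot(pattern_a, 7))
score_a = similarity(query, fingerprint_a)
score_b = similarity(query, fingerprint_b)
print(score_a, score_b)
```

In this toy setup the query correlates strongly with device A's fingerprint and only weakly with device B's. Real camera forensics works on two-dimensional sensor noise with far better denoising, but the principle is the same.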

Identifying the traits of unknown models is important, Facebook research lead Tal Hassner said, because deepfake software is extremely easy to customize. That ease of customization potentially allows bad actors to cover their tracks if investigators try to trace their activity.

Fingerprints were estimated using different constraints based on the properties of a fingerprint in general, including the fingerprint magnitude, repetitive nature, frequency range, and symmetrical frequency response.
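As a rough illustration of how such properties could be turned into numbers, the sketch below scores a small candidate fingerprint on three of them: magnitude, frequency range, and repetitive nature. The symmetry property is left out to keep it short. The formulas, the `cutoff` parameter, and the checkerboard pattern are assumptions for illustration, not the constraints actually used by the researchers.

```python
import cmath
import math

def dft2_magnitude(grid):
    """Naive 2D DFT magnitudes of a small square grid (only suitable for toy sizes)."""
    n = len(grid)
    mags = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            total = 0j
            for y in range(n):
                for x in range(n):
                    angle = -2 * math.pi * (u * y + v * x) / n
                    total += grid[y][x] * cmath.exp(1j * angle)
            mags[u][v] = abs(total)
    return mags

def fingerprint_scores(grid, cutoff=1):
    """Score a candidate fingerprint on three hypothetical criteria."""
    n = len(grid)
    # Magnitude: a fingerprint should be a small perturbation of the image.
    magnitude = sum(p * p for row in grid for p in row) / (n * n)

    energy = [[m * m for m in row] for row in dft2_magnitude(grid)]
    total = sum(sum(row) for row in energy)
    if total == 0:
        return {"magnitude": magnitude, "low_freq_share": 0.0, "peak_share": 0.0}

    # Frequency range: share of spectral energy in the lowest frequencies,
    # where ordinary image content tends to live.
    low = sum(
        energy[u][v]
        for u in range(n)
        for v in range(n)
        if min(u, n - u) <= cutoff and min(v, n - v) <= cutoff
    )
    # Repetitive nature: a periodic pattern concentrates its energy
    # in a single sharp peak away from the zero frequency.
    peak = max(
        energy[u][v] for u in range(n) for v in range(n) if (u, v) != (0, 0)
    )
    return {
        "magnitude": magnitude,
        "low_freq_share": low / total,
        "peak_share": peak / total,
    }

# Hypothetical fingerprint: a faint 4x4 checkerboard.
checkerboard = [[0.05 * (-1) ** (x + y) for x in range(4)] for y in range(4)]
scores = fingerprint_scores(checkerboard)
print(scores)
```

The faint checkerboard scores as one would hope for a fingerprint-like signal: tiny magnitude, almost no low-frequency energy, and nearly all of its energy in one spectral peak. In a training setting, quantities like these would be combined into a loss rather than printed.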

“Let’s assume a bad actor is generating lots of different deepfakes and uploads them on different platforms to different users”, says Hassner. “If this is a new AI model nobody’s seen before, then there’s very little that we could have said about it in the past. Now, we’re able to say, ‘Look, the picture that was uploaded here, the picture that was uploaded there, all of them came from the same model.’ And if we were able to seize the laptop or computer [used to generate the content], we will be able to say, ‘This is the culprit’”.

The researchers offer an analogy: think of a generative model as a type of car and its hyperparameters as its various specific engine components. Different cars can look similar, but under the hood, they can have very different engines with vastly different components. Their reverse engineering technique is somewhat like recognizing the components of a car based on how it sounds, even if it is a new car nobody has heard before.

Hassner compares the work to forensic techniques used to identify which model of camera was used to take a picture by looking for patterns in the resulting image. “Not everybody can create their own camera, though”, he says. “Whereas anyone with a reasonable amount of experience and standard computer can cook their own model that generates deepfakes”.

Not only can the resulting algorithm fingerprint the traits of a generative model, but it can also identify which known model created an image and whether an image is a deepfake in the first place.

However, it’s important to note that even these state-of-the-art results are far from reliable. When Facebook held a deepfake detection competition last year, the winning algorithm was only able to detect AI-manipulated videos 65.18% of the time. Researchers involved said that spotting deepfakes using algorithms is still very much an “unsolved problem”.

Part of the reason for this is that the field of generative AI is extremely active. New techniques are published every day, and it’s nearly impossible for any filter to keep up.

Those involved in the field are keenly aware of this dynamic, and when asked if publishing this new fingerprinting algorithm will lead to research that can go undetected by these methods, Hassner agrees. “I would expect so”, he says. “This is a cat and mouse game, and it continues to be a cat and mouse game”.

Deepfakes are shaking up the digital world. In cinema, graphics, and video games they may well be a revolution, but the problems they will cause through false identities will be countless. It is therefore right to have a weapon against misuse of the medium. Nevertheless, we should also consider the positive sides of a false identity. Where privacy is heavily violated, or in countries with a high level of censorship, a false identity could help those who take risks by expressing their ideas freely or those who don’t want to leave too many traces of themselves. This is very different from stealing someone else’s identity, since new algorithms make it possible to create a realistic face that never existed.

As always, a technology can be dangerous or beneficial, depending on the context in which it is used.

Source: theverge.com; Facebook